We all recognize emoji. They've become the global pop stars of digital communication. But what are they, technically speaking? And what might we learn by taking a closer look at these images, characters, pictographs… whatever they are? 🤔 (Thinking Face emoji) We will dig deep to learn how these thingamajigs work.

Please note: Depending on your browser, you may not be able to see all emoji featured in this article (especially the Tifinagh characters). Platforms also vary in how they display emoji. That's why the article always provides textual alternatives. Don't let that discourage you from reading, though!

Now, let's start with a seemingly simple question.

What Are Emoji?

⛅

🎈

🌲 🏡 🌲🌲 🏃 🌲

What we'll find is that they are born from, and depend on, the same technical foundation, character sets and document encoding that underlie the rest of our work as web-based designers, developers and content creators. So, we'll delve into these topics using emoji as motivation to explore this fundamental aspect of the web. We'll learn all about emoji as we go, including how we can effectively work them into our own projects, and we'll collect valuable resources along the way.

There is a lot of misinformation about these topics online, a fact made painfully clear to me as I was writing this article. Chances are you've encountered more than a little of it yourself. The recent release of Unicode 9 and the enormous popularity of emoji make now as good a time as any to take a moment to appreciate just how important this topic is, to look at it afresh and to fill in any gaps in our knowledge, large or small.

By the end of this article, you will know everything you need to know about emoji, regardless of the platform or application you're using, including the distributed web. What's more, you'll know where to find the authoritative details to answer any emoji-related question you may have now or in the future.

21 June 2016 brought the official release of Unicode Version 9.0, and with it 72 new emoji. What are they? Where do new emoji come from anyway? Why aren't your friends seeing the new ROFL, Fox Face, Crossed Fingers and Pancakes emoji you're sending them?! 😡 (Pouting Face emoji) Keep reading for the answers to these and many other questions.

A question to get us started: What is the plural form of the word "emoji"?

It's a question that came up in the process of reviewing and editing this article. The good news is that I have an answer! The bad news (depending on how bothered you are by triviality) is that the answer is that there is no definitive answer. I believe the most accurate answer that can be given is to say that, currently, there is no established correct form for the plural of emoji.

An article titled "What's the Plural of Emoji?" by Robinson Meyer, published by The Atlantic on 6 January 2016, discusses exactly this issue. The author turns up recent conflicting uses of both forms "emoji" and "emojis," even within the same national publications:

In written English right now, there's little consensus on this question. National publications have not settled on a regular style. The Atlantic, for instance, used both (emoji, emojis) in the last quarter of 2015. And in October alone in The New York Times, you could find the technology reporter Vindu Goel covering Facebook's "six new emoji," despite, two weeks later, Austin Ramzy detailing the Australian foreign minister's "liberal use of emojis." …

The Unicode Emoji Subcommittee, which, as we will see, is the group responsible for emoji in the Unicode Standard, uses "emoji" as the plural form. This plural form appears in passages of documentation quoted in this article. Consider, for example, the very first sentence of the first paragraph of the Emoji Subcommittee's official homepage at unicode.org:

Emoji are pictographs (pictorial symbols) that are typically presented in a colorful form and used inline in text. They represent things such as faces, weather, vehicles and buildings, food and drink, animals and plants, or icons that represent emotions, feelings, or activities.

I have chosen the plural "emoji", for the sake of consistency if nothing else. At this point in time, you can confidently use whichever form you prefer, unless of course the organization or individual for whom you're writing has strong opinions one way or the other. You can and should consult your style guide if necessary.

We'll start at the beginning, with the basic building blocks not just of emoji, nor even digital communication, but of all written language: characters and character sets.

Character Sets And Document Encoding: An Overview

Characters

A dictionary definition for a character will do to get us started: "A character is commonly a symbol representing a letter or number."

That's simple enough. But like so many other concepts, for it to be meaningful, we need to consider the broader context and put it into practice. Characters, in and of themselves, are not enough. I could draw a squiggle with a pencil on a piece of paper and rightfully call it a character, but that wouldn't be particularly valuable. Not only that, but it is difficult to convey a useful amount of information using a single character. We need more.

Character Sets

A character set is "a set of characters."

Expanding on that a bit, we can take a step back and consider a slightly more precise but still general description of a set: a group or collection of things that belong together, resemble one another or are usually found together.

Because we're dealing with sets in the context of computing, we can be a little more precise. In the field of computer science a set is: a collection of a finite number of values in no particular order, with the added condition that none of the values are repeated.

What's this about a collection? Technically speaking, a collection is a grouping of a number of items, possibly zero, that have some shared significance.

So, a character set is a grouping of some finite number of characters (i.e. a collection), in no particular order, such that none of the characters are repeated.

That's a solid, precise, if pedantic, definition.
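As a small illustration of that definition, here is a sketch using Python's built-in `set` type. The sample string and the variable names are made up for this example; nothing here is specific to any standardized character set.

```python
# Build a character set (a repertoire) from an arbitrary piece of text.
sample_text = "hello, world"      # hypothetical sample text
repertoire = set(sample_text)     # repeated characters collapse automatically

# Finite: we can count the members. The text has 12 characters, 9 of them distinct.
print(len(sample_text), len(repertoire))    # 12 9

# No particular order: sets with the same members are equal.
print(repertoire == set("dlrow ,olleh"))    # True

# No repeats: adding a character that is already present changes nothing.
repertoire.add("h")
print(len(repertoire))                      # 9
```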

The World Wide Web Consortium (W3C), the international community of member organizations that work together to develop standards for the web, has its own definition, which is not far from the generic one we've arrived at on our own.

From the W3C's "Character Encodings: Essential Concepts":

A character set or repertoire comprises the set of characters one might use for a particular purpose — be it those required to support Western European languages in computers, or those a Chinese child will learn at school in the third grade (nothing to do with computers).

So, any arbitrary set of characters can be considered a character set. There are, however, some well-known standardized character sets that are much more significant than any random grouping we might put together. One such standardized character set, unarguably the most important character set in use today, is Unicode. Again, quoting the W3C:

Unicode is a universal character set, i.e. a standard that defines, in one place, all the characters needed for writing the majority of living languages in use on computers. It aims to be, and to a large extent already is, a superset of all other character sets that have been encoded.

Text in a computer or on the Web is composed of characters. Characters represent letters of the alphabet, punctuation, or other symbols.

In the past, different organizations have assembled different sets of characters and created encodings for them — one set may cover just Latin-based Western European languages (excluding EU countries such as Bulgaria or Greece), another may cover a particular Far Eastern language (such as Japanese), others may be one of many sets devised in a rather ad hoc way for representing another language somewhere in the world.

Unfortunately, you can't guarantee that your application will support all encodings, nor that a given encoding will support all your needs for representing a given language. In addition, it is usually impossible to combine different encodings on the same Web page or in a database, so it is usually very difficult to support multilingual pages using ‘legacy' approaches to encoding.

The Unicode Consortium provides a large, single character set that aims to include all the characters needed for any writing system in the world, including ancient scripts (such as Cuneiform, Gothic and Egyptian Hieroglyphs). It is now fundamental to the architecture of the Web and operating systems, and is supported by all major web browsers and applications. The Unicode Standard also describes properties and algorithms for working with characters.

This approach makes it much easier to deal with multilingual pages or systems, and provides much better coverage of your needs than most traditional encoding systems.

Aside: This W3C document provides a number of understandable and useful definitions relevant to our discussion, so we'll come back to it a few times early in the article.

We just learned that the Unicode Consortium is the group responsible for the Unicode Standard. From their website:

The Unicode Consortium enables people around the world to use computers in any language. Our freely-available specifications and data form the foundation for software internationalization in all major operating systems, search engines, applications, and the World Wide Web. An essential part of our mission is to educate and engage academic and scientific communities, and the general public.

They provide the following answer to the question, what is Unicode?:

Unicode provides a unique number for every character, no matter what the platform, no matter what the program, no matter what the language.

Fundamentally, computers just deal with numbers. They store letters and other characters by assigning a number for each one. Before Unicode was invented, there were hundreds of different encoding systems for assigning these numbers. No single encoding could contain enough characters: for example, the European Union alone requires several different encodings to cover all its languages. Even for a single language like English no single encoding was adequate for all the letters, punctuation, and technical symbols in common use.

These encoding systems also conflict with one another. That is, two encodings can use the same number for two different characters, or use different numbers for the same character. Any given computer (especially servers) needs to support many different encodings; yet whenever data is passed between different encodings or platforms, that data always runs the risk of corruption.

Unicode provides a unique number for every character, no matter what the platform, no matter what the program, no matter what the language. … The emergence of the Unicode Standard, and the availability of tools supporting it, are among the most significant recent global software technology trends.

In short, Unicode is a single (very) large set of characters designed to encompass "all the characters needed for writing the majority of living languages in use on computers." As such, it "provides a unique number for every character, no matter what the platform, no matter what the program, no matter what the language."
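The conflict between legacy encodings that the Consortium describes is easy to reproduce. The sketch below uses three codecs that ship with Python's standard library (chosen here only as examples) and decodes the very same stored byte with each of them.

```python
# One stored byte, three legacy encodings, three different characters.
raw = bytes([0xE0])

# Western European, Greek and Cyrillic legacy encodings (example choices).
legacy_codecs = ["latin-1", "iso8859-7", "cp1251"]

def decode_all(data, codecs):
    """Map each codec name to the text it produces for the same bytes."""
    return {name: data.decode(name) for name in codecs}

decoded = decode_all(raw, legacy_codecs)
for name, text in decoded.items():
    print(f"{name:10} 0xE0 -> {text} (U+{ord(text):04X})")

# In Unicode, each of those three characters has its own distinct number.
print(sorted(f"U+{ord(text):04X}" for text in decoded.values()))
# ['U+00E0', 'U+03B0', 'U+0430']
```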

Both the W3C and Unicode Consortium use the term "encoding" as part of their definitions. Descriptions like that, helpful as they may be, are a big part of the reason why there is often confusion around what are in fact simple concepts. Encoding is a more involved, difficult-to-grasp concept than character sets, and one we'll discuss shortly. Don't worry about encoding quite yet; before we get from character sets to encoding, we need one more step.

Coded Character Sets

Going back to the same "Character Encodings: Essential Concepts" document, the W3C has something to say about coded character sets, too:

A coded character set is a set of characters for which a unique number has been assigned to each character. Units of a coded character set are known as code points. A code point value represents the position of a character in the coded character set. For example, the code point for the letter ‘à' in the Unicode coded character set is 225 in decimal, or E1 in hexadecimal notation. (Note that hexadecimal notation is commonly used for referring to code points…)

Note: There is an unfortunate mistake in the passage above. The character displayed is "à" and the location given for that symbol in the Unicode coded character set is 225 in decimal, or E1 in hexadecimal notation. But 225 (dec) / E1 (hex) is the location of "á," not "à," which is found at 224 (dec) / E0 (hex). Oops! 😒 (Unamused Face emoji)

That isn't too difficult to understand. Being able to describe any one character with a numeric code is convenient. Rather than writing "the Latin script letter ‘a' with a diacritic grave," we can say \xE0, the hexadecimal notation for the numeric location of that symbol ("à") in the coded character set known as Unicode. Among other advantages of this arrangement, we can look up that character without having to know what "Latin script letter ‘a' with a diacritic grave" means. The natural-language way of describing a character can be awkward for us, even more so for computers, which are both much better at looking up numeric references than we are and much worse at understanding natural-language descriptions.

So, a coded character set is simply a way to assign a numeric code to every character in a set such that there is a one-to-one correspondence between character and code. With that, not only is the Unicode Consortium's description of Unicode more understandable, but we're ready to tackle encoding.
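To make the one-to-one correspondence concrete, here is a minimal sketch using Python's built-in `ord()` and `chr()` functions and the standard `unicodedata` module. The helper name `describe` is invented for this example.

```python
import unicodedata

def describe(ch):
    """Return the code point of a character in decimal and hex, plus its Unicode name."""
    cp = ord(ch)
    return f"{cp} (dec) / {cp:X} (hex) / {unicodedata.name(ch)}"

print(describe("\u00e0"))  # 224 (dec) / E0 (hex) / LATIN SMALL LETTER A WITH GRAVE
print(describe("\u00e1"))  # 225 (dec) / E1 (hex) / LATIN SMALL LETTER A WITH ACUTE

# The mapping works in both directions: number to character, character to number.
print(chr(0xE0) == "\u00e0", ord(chr(0xE0)) == 0xE0)  # True True
```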

⛅

🎈

🌲 🏡 🏃 🌲🌲 🌲

Encoding

We've quickly reviewed characters, character sets and coded character sets. That brings us to the last concept we need to cover before turning our attention to emoji. Encoding is both the hardest concept to wrap our heads around and also the easiest. It's the easiest because, as we'll see, in a practical sense, we don't need to know all that much about it.

We've come to an important point of transition. Character sets and coded character sets are in the human domain. These are concepts that we must have a good grasp of in order to confidently and effectively do our work. When we get to encoding, we're transitioning into the realm of the computing devices and, more specifically, the low-level storage, retrieval and transmission of data. Encoding is interesting, and it is important that we get right what little of it we are responsible for, but we need only a high-level understanding of the technical details in order to do our part.

The first thing to know is that "character sets" and "encodings" (or, for our purpose here, "document encodings") are not the same thing. That may seem obvious to you, especially now that we're clearly discussing them separately, but it is a common source of confusion. The relationship is a little easier to understand, and keep straight, if we think of the latter as "character set encodings."

It's back to the W3C's "Character Encodings: Essential Concepts" for a definition of encoding to get us started:

The character encoding reflects the way the coded character set is mapped to bytes for manipulation by a computing device.

In the table below, which reproduces the same information from a graphic appearing in the W3C document, the first 4 characters and corresponding code points are part of the Tifinagh alphabet, and the fifth is the more familiar exclamation point.

Aside: The exclamation point is only more familiar to those of us who use the Latin alphabet, or whose languages have adopted the mark, including Russian, Chinese, Korean and Japanese, among others.

The Tifinagh alphabet is associated with Berber script and languages and dialects used by people indigenous to North Africa. It's worth considering that the exclamation point might look as foreign to them as the Tifinagh letter yaz (ⵣ) looks to us.

| Character (glyph) | Unicode code point (hexadecimal) | UTF-8 encoding (bytes in memory, hexadecimal) |
|---|---|---|
| ⴰ | 2D30 | E2 B4 B0 |
| ⵣ | 2D63 | E2 B5 A3 |
| ⵓ | 2D53 | E2 B5 93 |
| ⵍ | 2D4D | E2 B5 8D |
| ! | 21 | 21 |

The table shows, from left to right, the symbol itself, the corresponding code point and the way the code point maps to a sequence of bytes using the UTF-8 encoding scheme. Each byte in memory is represented by a two-digit hexadecimal number. So, for example, in the first row we see that the UTF-8 encoding of the Tifinagh letter ya (ⴰ) requires 3 bytes of storage (E2 B4 B0).
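The mapping can also be read in the other direction, from stored bytes back to a code point. The following sketch decodes byte sequences like those in the table using only Python built-ins; the function name and the dictionary of expected values are invented for this example.

```python
def code_point_from_utf8(hex_bytes):
    """Decode a spaced string of hex byte values as UTF-8 and return the code point."""
    text = bytes.fromhex(hex_bytes).decode("utf-8")
    return ord(text)  # each sequence below decodes to exactly one character

# UTF-8 byte sequences paired with the code points we expect them to decode to.
expected = {
    "E2 B4 B0": 0x2D30,  # Tifinagh letter ya
    "E2 B5 A3": 0x2D63,  # Tifinagh letter yaz
    "E2 B5 93": 0x2D53,
    "E2 B5 8D": 0x2D4D,
    "21": 0x21,          # exclamation point
}

for hex_bytes, cp in expected.items():
    decoded = code_point_from_utf8(hex_bytes)
    print(f"{hex_bytes:8} -> U+{decoded:04X}", decoded == cp)
```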

Important: It may be that you are not seeing the Tifinagh characters in that table at all, but rather generic placeholders. That does not mean the article (or the information in it) is broken. 😥 (Disappointed but relieved face emoji)

There is a perfectly good explanation for those placeholders. Moreover, they are not just any old generic character. They serve a purpose, and each has a meaning. If you are desperate to know more, you can jump right to the glyphs section of this article where you will find more information. But I'd recommend that you just keep reading, and we'll get there soon enough.

If those characters are missing for you, and you want to know what they look like, the Wikipedia entry for Tifinagh includes images representing each as well as the characters themselves. That's as good a place to see them as any other.

There are two important points to take away from the information in this table:

First, encodings are distinct from the coded character sets. The coded character set is the information that is stored, and the encoding is the manner in which it is stored. (Don't worry about the specifics.)

Secondly, note how under the UTF-8 encoding scheme the Tifinagh code points map to three bytes, but the exclamation point maps to a single byte.

Although the code point for the letter à in the Unicode coded character set is always 225 (in decimal), in UTF-8 it is represented in the computer by two bytes. … there isn't a trivial, one-to-one mapping between the coded character set value and the encoded value for this character. … the letter à can be represented by two bytes in one encoding and four bytes in another.

The encoding forms that can be used with Unicode are called UTF-8, UTF-16, and UTF-32.

The W3C's explanation is accurate, concise, informative and, for many readers, clear as mud. At this point, we're dealing with pretty low-level stuff. Let's keep pushing ahead; as is often the case, learning more will give us the context we need to better understand what we've already seen.

UTF is a set of encodings specifically created for the implementation of Unicode. It is part of the core specification of Unicode itself.

The Unicode Consortium maintains an official website for Unicode 9.0 (as well as all previous versions of the specification). A PDF of the core specification was just recently published to the website in August 2016. You'll find the discussion of UTF in "Section 2.5: Encoding Forms."

Computers handle numbers not simply as abstract mathematical objects, but as combinations of fixed-size units like bytes and 32-bit words. A character encoding model must take this fact into account when determining how to associate numbers with the characters.

Actual implementations in computer systems represent integers in specific code units of particular size—usually 8-bit (= byte), 16-bit, or 32-bit. In the Unicode character encoding model, precisely defined encoding forms specify how each integer (code point) for a Unicode character is to be expressed as a sequence of one or more code units. The Unicode Standard provides three distinct encoding forms for Unicode characters, using 8-bit, 16-bit, and 32-bit units. These are named UTF-8, UTF-16, and UTF-32, respectively. The "UTF" is a carryover from earlier terminology meaning Unicode (or UCS) Transformation Format. Each of these three encoding forms is an equally legitimate mechanism for representing Unicode characters; each has advantages in different environments.

Note: These encoding forms are consistent from one version of the specification to the next. In fact, their stability is vital to maintaining the integrity of the Unicode standard. Whatever we read about the encoding forms in the Version 9.0 specification was true of Version 8.0 as well, and will hold going forward.

The Unicode specification discusses at length the pros and cons and preferred usage of these three forms — UTF-8, UTF-16 and UTF-32 — endorsing the use of all three as appropriate. For the purposes of this brief discussion of UTF encoding, it's enough to know the following:

- UTF-8 uses 1 byte to represent characters in the ASCII set, 2 bytes for characters in several more alphabetic blocks, 3 bytes for the rest of the BMP, and 4 bytes as needed for supplementary characters.

- UTF-16 uses 2 bytes for any character in the BMP, and 4 bytes for supplementary characters.

- UTF-32 uses 4 bytes for all characters.
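The byte counts in this list can be checked with a short sketch. The hand-rolled `utf8_encode` function below is an illustration of where the 1, 2, 3 and 4 byte boundaries fall, not a production encoder (it skips validation, for instance of surrogate code points). The sample characters are arbitrary picks from each range, and the big-endian codec variants are used so that no byte order mark is counted.

```python
def utf8_encode(cp):
    """Encode a single code point as UTF-8 by hand (illustrative, no validation)."""
    if cp < 0x80:       # ASCII: 1 byte
        return bytes([cp])
    if cp < 0x800:      # 2 bytes
        return bytes([0xC0 | (cp >> 6), 0x80 | (cp & 0x3F)])
    if cp < 0x10000:    # rest of the BMP: 3 bytes
        return bytes([0xE0 | (cp >> 12),
                      0x80 | ((cp >> 6) & 0x3F),
                      0x80 | (cp & 0x3F)])
    # Supplementary characters: 4 bytes
    return bytes([0xF0 | (cp >> 18),
                  0x80 | ((cp >> 12) & 0x3F),
                  0x80 | ((cp >> 6) & 0x3F),
                  0x80 | (cp & 0x3F)])

def byte_lengths(ch):
    """Return the storage size of one character in (UTF-8, UTF-16, UTF-32)."""
    return (len(ch.encode("utf-8")),
            len(ch.encode("utf-16-be")),
            len(ch.encode("utf-32-be")))

# One sample per range: ASCII, 2-byte range, rest of BMP, supplementary.
samples = ["a", "\u00e0", "\u2d30", "\U0001f60c"]
for ch in samples:
    # The hand-rolled encoder agrees with Python's built-in codec.
    assert utf8_encode(ord(ch)) == ch.encode("utf-8")
    print(f"U+{ord(ch):04X}", byte_lengths(ch))
# U+0061 (1, 2, 4)
# U+00E0 (2, 2, 4)
# U+2D30 (3, 2, 4)
# U+1F60C (4, 4, 4)
```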

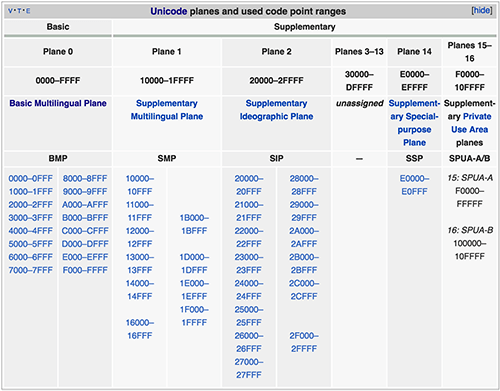

Aside: What is the BMP? We've said that Unicode is a very large character set intended to encompass every other character set. A plane is a part of the organizational structure of Unicode consisting of a contiguous group of 65,536 (2^16) code points. There are 17 such planes. Let's do a little math to fill out the picture…

65,536 code points * 17 planes = 1,114,112 code points

So, there are theoretically 1,114,112 possible code points without extending this basic arrangement.

Note: Although it would technically be possible to expand the code point space that uses UTF-8 encoding, because of the changes that would require and the Unicode Consortium's stability policies, which are intended to ensure continuity and backward-compatibility, the space will probably not be expanded within the period of time anyone is likely to be reading this article. If I'm wrong about that, let me say hello to you, future reader. 👋 (Waving Hand Sign emoji) How are things? I hope you've figured out how to clean up some of the mess we've created in the world today. (I assure you that many of us feel bad about it. 😔 (Pensive Face emoji))

The BMP is plane 0 (i.e. the first plane). What is the significance, if any, of the BMP? The BMP alone contains most of the characters of all modern languages. The arrangement of the BMP is nicely visualized in the chart below, taken from the Wikipedia entry for Unicode planes, in which the BMP is shown as a series of boxes such that each box represents 256 code points (16 rows × 16 columns × 256 code points per box = 65,536 code points).

Let's quickly look at where some actual characters would fit into this table. Take, for example, the Latin script letter "a". The code point for "a" is U+0061, which puts it in box 00. That is to say, it is one of 256 characters represented in the chart by box 00. The code points for these characters range from U+0000 (assigned to the NULL character) to U+00FF (the code point for "ÿ," the Latin lowercase letter "y" with a diaeresis). These 256 characters make up the first two Unicode blocks, referred to as "C0 Controls and Basic Latin" (U+0000 to U+007F) and "C1 Controls and Latin-1 Supplement" (U+0080 to U+00FF). It is here where you will find the 52 letters of the ISO basic Latin alphabet (a to z, A to Z) and the rest of the characters from the US-ASCII encoding standard. It is also home to the code points corresponding to characters from ISO 8859-1 (Latin-1), with its many accented characters for representing Western European languages (Italian, French, Spanish, German, Portuguese and Dutch, among others). For a more detailed look at these blocks, refer to the Wikibooks Unicode character reference for 0000 to 0FFF.

This is a good illustration both of what it means and of the way in which Unicode encompasses other character sets. Those other sets — disparate, overlapping, isolated from one another, and easily confused — are carried over and integrated as part of Unicode. The importance of Unicode simply cannot be overstated. (It would not be possible for me to write this article, or for you to read it, were it not for Unicode.)

What about the Ethiopic syllable "ቈ" (QWA)? That's code point U+1248, box 12, which the chart tells us contains African scripts. You'll notice that, as you might expect, most of the space in the BMP is allocated to code points for Chinese, Japanese and Korean characters.
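Finding the plane and the BMP box for a code point is simple bit arithmetic, sketched below. The function names `plane` and `bmp_box` are made up for this example; the box label is just the upper two hexadecimal digits of a four-digit BMP code point.

```python
def plane(cp):
    """Return the plane number (0 to 16) that a code point belongs to."""
    return cp >> 16          # each plane holds 2**16 code points

def bmp_box(cp):
    """Return the two-digit hex label of the 256-code-point box within the BMP."""
    if plane(cp) != 0:
        raise ValueError("code point is outside the BMP")
    box = cp >> 8            # each box holds 2**8 = 256 code points
    return f"{box:02X}"

print(bmp_box(ord("a")))     # 00  (U+0061)
print(bmp_box(0x00FF))       # 00  (U+00FF, last code point in box 00)
print(bmp_box(0x1248))       # 12  (U+1248, Ethiopic syllable QWA)
print(plane(0x10FFFF))       # 16  (the very last code point, in the 17th plane)
```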

You might ask, "Are 17 planes and 1,114,112 code points a lot?"

Beyond the BMP, the majority of planes are entirely unassigned. Only six, including the BMP, have any assigned characters at all.

There are "only" 120,672 Unicode characters in Unicode Version 8, released on 17 June 2015. Version 9.0, the current version, which was officially released 21 June 2016, adds 7,500 additional characters, for a total of 128,172. If the Unicode Consortium adds an average of 7,500 characters a year, it would take approximately 131 years to fill the code space. That's assuming we don't run out of characters first. So, there is certainly plenty of space left… for future emoji. 😁 (Grinning Face with Smiling Eyes emoji)
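The back-of-the-envelope arithmetic above can be reproduced in a few lines. The figures are the ones quoted in this article; the constant growth rate is, of course, only a simplifying assumption.

```python
PLANES = 17
CODE_POINTS_PER_PLANE = 2 ** 16                  # 65,536

total_code_points = PLANES * CODE_POINTS_PER_PLANE
print(f"{total_code_points:,}")                  # 1,114,112

characters_v8 = 120_672                          # Unicode 8.0, as quoted above
added_in_v9 = 7_500
characters_v9 = characters_v8 + added_in_v9
print(f"{characters_v9:,}")                      # 128,172

# Assumption: the code space keeps filling at 7,500 characters per year.
years_to_fill = (total_code_points - characters_v9) / added_in_v9
print(round(years_to_fill))                      # 131
```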

Speaking of emoji, where do they fit in? As an example, let's look at 🐘 (Elephant emoji). The code point for the Elephant emoji is U+1F418. That's beyond the BMP, which ends at U+FFFF; it belongs to plane 1, the Supplementary Multilingual Plane (SMP), so it falls outside figure 1 entirely. (Don't confuse it with box 1F of the BMP, which covers U+1F00 to U+1FFF.) The elephant was part of the original set of emoji implemented in Unicode Version 6. A more recent addition, 🦄 (Unicorn Face emoji), introduced in Version 8.0, is at U+1F984.

For those of you who have never before given much thought to Unicode, and character encodings more generally, or have been confused or intimidated by the topic, I hope you're already beginning to see that it's not so very hard to figure out.

From the brief description of storage requirements for the various UTF encodings above, you might guess that UTF-8 is more complicated to implement (owing to the fact that it is not fixed-width) but more space-efficient than, say, UTF-32, which is more regular but less space-efficient, with every character taking up exactly 4 bytes.

| Encoding | ⴰ | ⵣ | ⵓ | ⵍ | ! |
|---|---|---|---|---|---|
| UTF-8 | E2 B4 B0 | E2 B5 A3 | E2 B5 93 | E2 B5 8D | 21 |
| UTF-16 | 2D 30 | 2D 63 | 2D 53 | 2D 4D | 00 21 |
| UTF-32 | 00 00 2D 30 | 00 00 2D 63 | 00 00 2D 53 | 00 00 2D 4D | 00 00 00 21 |

Ignoring the Berber characters and focusing on the exclamation point in the rightmost column, we see that the same character would take up a single byte in UTF-8, 2 bytes (two times the storage) in UTF-16, and 4 bytes (four times the storage) in UTF-32. That's three very different amounts of storage to convey the exact same information. Multiply that difference in storage requirements by the size of the web, estimated to be at least 4.83 billion pages currently, and it's easy to appreciate that the storage requirements of these encodings are not an inconsequential consideration.
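A comparison like the one in the table can be generated with Python's built-in codecs. This sketch assumes big-endian byte order with no byte order mark (the `-be` codec variants), which is the layout the table uses; the helper name `encoded_hex` is invented for this example.

```python
# The four Tifinagh letters from the table, followed by the exclamation point.
characters = ["\u2d30", "\u2d63", "\u2d53", "\u2d4d", "!"]

# Display label -> Python codec name (big-endian, no byte order mark).
encodings = {"UTF-8": "utf-8", "UTF-16": "utf-16-be", "UTF-32": "utf-32-be"}

def encoded_hex(ch, codec):
    """Return the encoded bytes of a character as spaced, two-digit hex values."""
    return " ".join(f"{byte:02X}" for byte in ch.encode(codec))

for label, codec in encodings.items():
    row = " | ".join(encoded_hex(ch, codec) for ch in characters)
    print(f"{label}: {row}")
# UTF-8: E2 B4 B0 | E2 B5 A3 | E2 B5 93 | E2 B5 8D | 21
# UTF-16: 2D 30 | 2D 63 | 2D 53 | 2D 4D | 00 21
# UTF-32: 00 00 2D 30 | 00 00 2D 63 | 00 00 2D 53 | 00 00 2D 4D | 00 00 00 21
```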

Whether or not that all made sense to you, here's the good news…

When dealing with HTML, the character set we'll use is Unicode, and the character encoding we'll use is always UTF-8. It turns out that that's all we'll ever need to concern ourselves with. 😌 (Relieved Face emoji, U+1F60C)

Regardless, it's no less important to be aware of the general concepts, as well as the simple fact that there are other character sets and encodings.

Now, we can bring all of this to the context of the web, and start working our way toward emoji.

⛅

🎈

🌲 🏡 🏃 🌲🌲 🌲

Declaring Character Sets And Document Encoding On the Web

We need to tell user agents (web browsers, screen readers, etc.) how to correctly interpret our HTML documents. In order to do that, we need to specify both the character set and the encoding. There are two (overlapping) ways to go about this:

- utilizing HTTP headers,

- declaring within the HTML document itself.
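As a rough sketch of how the two approaches line up, the snippet below builds a tiny HTML document that declares UTF-8 in its markup, encodes it to bytes, and pairs it with a matching `Content-Type` header. The page content, the variable names and the plain dictionary of headers are all hypothetical; how headers are actually sent depends on your server or framework.

```python
# A minimal HTML document that declares its own encoding in the markup.
html = (
    "<!DOCTYPE html>\n"
    '<html lang="en">\n'
    "<head>\n"
    '  <meta charset="utf-8">\n'
    "  <title>Emoji test</title>\n"
    "</head>\n"
    "<body><p>Elephant: \U0001F418</p></body>\n"
    "</html>\n"
)

# The bytes that are actually sent must be encoded the way the declarations claim.
body = html.encode("utf-8")

# HTTP response headers carrying the same declaration.
headers = {
    "Content-Type": "text/html; charset=utf-8",
    "Content-Length": str(len(body)),   # length in bytes, not characters
}

print(headers)
print(len(html), "characters,", len(body), "bytes")
```

Note that the byte count is larger than the character count, because the emoji alone takes 4 bytes in UTF-8.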

A brief aside about HTML5, WHATWG and W3C: This is hardly an article about HTML5, much less the sort of in-depth treatment of HTML5 that would warrant a discussion of its history and the complicated technical and political issues surrounding this critically important standard. Regardless, not only do I mention HTML5 and show fragments of markup, but I refer to the HTML5 specification (more than once), and that probably crosses the line, requiring me to say at least a little something about what has become a thorny issue.

It is not my intention to add anything new to this discussion. (I wouldn't be one to do that, by any stretch.) I've said before and will say again that I believe the distributed web is the single best piece of evidence that we can do good on a global scale. So, I genuinely care about this stuff. I want to get it right, and I also want to be respectful of the hard work, dedication and passion of all parties involved.

First, there are two HTML5 specifications, one the work of the WHATWG and the other maintained by the W3C. The WHATWG's own FAQ says as much:

Which group has authority in the event of a dispute?

The two groups have different specs, so each has authority over its spec. The specs can and have diverged on some topics; unfortunately, these differences are not documented anywhere.

Setting aside for a brief moment the issue of what it means in practice to have two divergent specifications ostensibly describing the same standard, not an insignificant issue, the situation seems clear enough. There are two separate specifications, and two different organizations, each authoritative over its own work. The situation is much less straightforward than that would make it seem. However, the complications are what allow us to make some sense of the notion that there are two specifications for one standard. Before we get to the current state of affairs, we need a brief history lesson.

Rather than retell the history myself (badly), I'll let the WHATWG and W3C do it. The current versions of both specifications include history sections that very nearly match. The WHATWG spec is published as a continually updated "Living Standard." The W3C spec, on the other hand, follows a rigorous publication process, moving through distinct phases: candidate recommendation (CR), proposed recommendation (PR) and W3C recommendation (REC). Most recently, the "HTML 5.1: W3C Proposed Recommendation" was published on 15 September 2016.

It is these two specs, the WHATWG's "Living Standard" for HTML and the W3C's HTML 5.1 Proposed Recommendation, that I'll be referring to here.

It would be excessive to copy and paste the entire history section of both specifications. So, please go ahead and read about the history from the source for yourself by following one of the links above. They are very nearly identical. In fact, let's look at the passages that differ the most between the two:

From WHATWG's version of the spec:

For a number of years, both groups then worked together. In 2011, however, the groups came to the conclusion that they had different goals: the W3C wanted to publish a "finished" version of "HTML5", while the WHATWG wanted to continue working on a Living Standard for HTML, continuously maintaining the specification rather than freezing it in a state with known problems, and adding new features as needed to evolve the platform.

Since then, the WHATWG has been working on this specification (amongst others), and the W3C has been copying fixes made by the WHATWG into their fork of the document (which also has other changes).

Here is the corresponding passage from the W3C's HTML 5.1 Proposed Recommendation:

Since then, the W3C HTML WG has been cherry picking patches from the WHATWG that resolved bugs registered on the W3C HTML specification or more accurately represented implemented reality in user agents. At time of publication of this document, patches from the WHATWG HTML specification have been merged until January 12, 2016. The W3C HTML editors have also added patches that resulted from discussions and decisions made by the W3C HTML WG as well a bug fixes from bugs not shared by the WHATWG.

That gives us a very quick summary. After a period of officially working together, these two standards bodies have parted ways. However, there is still an awkward collaboration of sorts on the HTML5 standard itself. The WHATWG works on its specification, rolling in changes continually. Much like a modern evergreen operating system (OS) or application with an update feature, the latest changes are incorporated without waiting for the next official release. This is what the WHATWG means by "living standards," which it describes as follows:

This means that they are standards that are continuously updated as they receive feedback, either from Web designers, browser vendors, tool vendors, or indeed any other interested party. It also means that new features get added to them over time, at a rate intended to keep the specifications a little ahead of the implementations but not so far ahead that the implementations give up.

Despite the continuous maintenance, or maybe we should say as part of the continuing maintenance, a significant effort is placed on getting the specifications and the implementations to converge — the parts of the specification that are mature and stable are not changed willy nilly. Maintenance means that the days where the specifications are brought down from the mountain and remain forever locked, even if it turns out that all the browsers do something else, or even if it turns out that the specification left some detail out and the browsers all disagree on how to implement it, are gone. Instead, we now make sure to update the specifications to be detailed enough that all the implementations (not just browsers, of course) can do the same thing. Instead of ignoring what the browsers do, we fix the spec to match what the browsers do. Instead of leaving the specification ambiguous, we fix the the [sic] specification to define how things work.

For its part, the W3C will from time to time package these updates (at least some of them), possibly along with its own changes, to arrive at a new version of its HTML 5.x standard.

Assuming that the WHATWG process works as advertised — and that may be a pretty good assumption considering that many of the people directly involved with the WHATWG also work for the organizations responsible for the implementation of the standard (e.g. Apple, Google, Mozilla and Opera) — the best strategy is probably to refer to the WHATWG spec first. That is what I have done in this article. Where I quote from an HTML5 spec, I am referencing the WHATWG specification. I do, however, make use of informational documents from the W3C throughout the article because they are helpful and not inconsistent with either spec.

Honestly, for our purposes here, it hardly matters. The sections I pull from are nearly (though not strictly) identical. But I suppose that's really part of the problem, rather than evidence of cohesiveness. To get a sense of just how messy the situation is, take a look at the "Fork Tracking" page on the WHATWG's wiki.

content-type HTTP Header Declaration

As long as we're talking about the web, there's good reason to believe that the W3C has something to say about the topic:

When you retrieve a document, a web server sends some additional information. This is called the HTTP header. Here is an example of the kind of information about the document that is passed as part of the header with a document as it travels from the server to the client.

HTTP/1.1 200 OK

Date: Wed, 05 Nov 2003 10:46:04 GMT

Server: Apache/1.3.28 (Unix) PHP/4.2.3

Content-Location: CSS2-REC.en.html

Vary: negotiate,accept-language,accept-charset

TCN: choice

P3P: policyref=http://www.w3.org/2001/05/P3P/p3p.xml

Cache-Control: max-age=21600

Expires: Wed, 05 Nov 2003 16:46:04 GMT

Last-Modified: Tue, 12 May 1998 22:18:49 GMT

ETag: "3558cac9;36f99e2b"

Accept-Ranges: bytes

Content-Length: 10734

Connection: close

Content-Type: text/html; charset=UTF-8

Content-Language: en

If your document is dynamically created using scripting, you may be able to explicitly add this information to the HTTP header. If you are serving static files, the server may associate this information with the files. The method of setting up a server to pass character encoding information in this way will vary from server to server. You should check with the documentation or your server administrator.
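To make the W3C's point about dynamically created documents a little more concrete, here is a minimal sketch in Python using the standard library's WSGI helpers. The names `app` and `call_app`, as well as the page content, are made up for illustration; your own server or framework will have its own way of setting headers.

```python
from wsgiref.util import setup_testing_defaults


def app(environ, start_response):
    """A tiny dynamic page that declares its encoding in the HTTP header."""
    body = "<!doctype html><title>Octopus</title><p>\U0001F419</p>".encode("utf-8")
    headers = [
        ("Content-Type", "text/html; charset=UTF-8"),
        ("Content-Length", str(len(body))),
    ]
    start_response("200 OK", headers)
    return [body]


def call_app(application):
    """Invoke a WSGI application directly and capture what it would send."""
    environ = {}
    setup_testing_defaults(environ)  # fills in a plausible fake request
    captured = {}

    def start_response(status, headers):
        captured["status"] = status
        captured["headers"] = dict(headers)

    body = b"".join(application(environ, start_response))
    return captured["status"], captured["headers"], body
```

Calling `call_app(app)` returns the status line, a dictionary of headers and the body bytes, all without opening a network socket. To actually serve the page, the same `app` could be handed to `wsgiref.simple_server.make_server`.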

Without getting too heavily into the details, while still coming away from the discussion with some sense of how this works, let's clarify some of the terminology.

HTTP is the network application protocol underlying communication on the web. (The same protocol is also used in other contexts, but was originally designed for the web.) HTTP is a client-server protocol and facilitates communication between the software making a request (the client) and the software fulfilling or responding to the request (the server) by exchanging request and response messages. These messages all must follow a well-defined, standardized structure so that they can be anticipated and interpreted properly by the recipient.

Part of this structure is a header providing information about the message itself, about the capabilities or requirements of the originator or of the recipient of the message, and so on. The header consists of a number of individual header lines. Each line represents a single header field comprising a lone key-value pair. One of the 45 or so defined fields is Content-Type, which identifies the media type of the message content along with its encoding and character set.
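The key-value structure of the header is simple enough to pick apart by hand. The following is a rough sketch, not a real HTTP parser: `parse_response_head` is a hypothetical helper, the sample is a shortened copy of the W3C example above, and repeated field names would simply overwrite one another here.

```python
RAW_RESPONSE_HEAD = """\
HTTP/1.1 200 OK
Date: Wed, 05 Nov 2003 10:46:04 GMT
Server: Apache/1.3.28 (Unix) PHP/4.2.3
Content-Length: 10734
Content-Type: text/html; charset=UTF-8
Content-Language: en"""


def parse_response_head(raw):
    """Split a response head into its status line and a dict of header fields."""
    status_line, *field_lines = raw.splitlines()
    fields = {}
    for line in field_lines:
        # Only the first colon separates name from value; values may contain colons.
        name, _, value = line.partition(":")
        # Field names are case-insensitive, so normalize them to lowercase.
        fields[name.strip().lower()] = value.strip()
    return status_line, fields


status_line, fields = parse_response_head(RAW_RESPONSE_HEAD)
print(fields["content-type"])
```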

Note: The Internet Engineering Task Force (IETF) is the group responsible for developing and maintaining many of the open standards that are the foundation of communication on the Internet, including HTTP. The IETF documents the HTTP standard, as well as their other work, in a series of formal specifications referred to as "Request for Comments" (RFCs).

In the example above, among the header lines, we see:

Content-Type: text/html; charset=UTF-8

The field contains two pieces of information.

The first is the media type, text/html, which identifies the content of the message as an HTML document, which a web browser can process directly. There are other media types, like application/pdf (a PDF document), which generally need to be handled differently.

The second piece of information is the document encoding and character set, charset=UTF-8. As we've already seen, UTF-8 is exclusively used with Unicode. So UTF-8 alone is enough to identify both the encoding and character set.
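If you'd like to see those two pieces pulled apart programmatically, here is a small sketch that leans on Python's standard `email.message.Message` class, which understands this header syntax. The wrapper name `split_content_type` is my own invention.

```python
from email.message import Message


def split_content_type(value):
    """Return (media type, charset) for a Content-Type field value."""
    message = Message()
    message["Content-Type"] = value
    # get_content_charset() lowercases the charset and returns None if absent.
    return message.get_content_type(), message.get_content_charset()


print(split_content_type("text/html; charset=UTF-8"))
print(split_content_type("application/pdf"))
```

Note that the charset comes back in lowercase. Encoding labels are case-insensitive, so UTF-8 and utf-8 mean the same thing.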

You can view these HTTP headers yourself. Options for doing so include, among others:

- browser developer tools

- web-based tools

Checking HTTP Headers Using A Browser’s Developer Tools

Checking HTTP Headers with Firefox (Recent Versions)

- Open the "Web Console" from the "Tools" → "Web Developer" menu.

- Select the "Network" tab in the pane that appears (at the bottom of the browser window, if you haven't changed this default).

- Navigate to a web page you'd like to inspect. You'll see the list fill in with all of the resources that contribute to the page, with the root document at the top (and component resources listed underneath).

- Select any one of these resources from the list for which you'd like to look at the accompanying headers. (The pane should split.)

- Select the "Headers" tab in the new pane that appears.

- You'll see response and request headers corresponding to both ends of the exchange, and among the response headers you should find the `Content-Type` field.

Checking HTTP Headers with Chrome (Recent Versions)

- Open the "Developer Tools" from the "View" → "Developer" menu.

- Select the "Network" tab in the pane that appears (at the bottom of the browser window, if you haven't changed this default).

- Navigate to a web page you'd like to inspect. You'll see the list fill in with all of the resources that contribute to the page, with the root document at the top (and component resources listed underneath).

- Select any one of these resources from the list for which you'd like to look at the accompanying headers. (The pane should split.)

- Select the "Headers" tab in the new pane that appears.

- You'll see response and request headers corresponding to both ends of the exchange, and among the response headers you should find the `Content-Type` field.

Checking HTTP Headers Using Web-Based Tools

Many websites allow you to view the HTTP headers returned from the server for any public website, some much better than others. One reliable option is the W3C's own Internationalization Checker.

Simply type a URL into the provided text-entry field, and the page will return a table with information related to the internationalization and language of the document at that address. You should see a section titled "Character Encoding," with a row for the "HTTP Content-Type" header. You're hoping to see a value of utf-8.

Using A Meta Element With charset Attribute

We can also declare the character set and encoding in the document itself. More specifically, we can use an HTML meta element to specify the character set and encoding.

There are two different, widely used, equally valid formats (both interpreted in exactly the same way).

There is the older HTML 4.x format:

<meta http-equiv="Content-Type" content="text/html; charset=utf-8">

And the newer, equivalent, HTML5 version:

<meta charset="UTF-8">

The latter form is shorter, which makes it easier to type and harder to get wrong, and it takes up less space. For these reasons, it's the one we should use.

It might occur to you to ask (or not), "If the browser needs to know the character set and encoding before it can read the document, how can it read the document to find the meta element and get the value of the charset attribute?"

That's a good question. How clever of you. 🐙 (Octopus emoji, U+1F419 — widely considered to be among the cleverest of all animal emoji) I probably wouldn't have thought to ask that. I had to learn to ask that question. (Sometimes it's not just the answers we need to learn, but the questions as well.)

For the answer to this apparent riddle, I'll quote an often-cited blog post by Joel Spolsky on the topic of character sets and encoding, titled "The Absolute Minimum Every Software Developer Absolutely, Positively Must Know About Unicode and Character Sets (No Excuses!)":

It would be convenient if you could put the Content-Type of the HTML file right in the HTML file itself, using some kind of special tag. Of course this drove purists crazy… how can you read the HTML file until you know what encoding it's in?! Luckily, almost every encoding in common use does the same thing with characters between 32 and 127, so you can always get this far on the HTML page without starting to use funny letters:

<html>

<head>

<meta http-equiv="Content-Type" content="text/html; charset=utf-8">

But that meta tag really has to be the very first thing in the <head> section because as soon as the web browser sees this tag it's going to stop parsing the page and start over after reinterpreting the whole page using the encoding you specified.

Notice the use of the older-style meta element. This post is from 2003 and, therefore, predates HTML5.

That makes sense, right? Let's also take a look at what the HTML spec has to say about it. From the WHATWG HTML5 spec on "Specifying the Document's Character Encoding":

The element containing the character encoding declaration must be serialized completely within the first 1024 bytes of the document.

In order to satisfy this condition as safely as possible, it's best practice to have the meta element specifying the charset as the first element in the head section of the page.
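To get a feel for why the first 1024 bytes matter, here is a deliberately simplified sketch. It is not the prescan algorithm from the spec, just a regular expression applied to the start of the document, and the function name `sniff_meta_charset` is hypothetical.

```python
import re

# Matches both <meta charset="..."> and the older http-equiv form.
META_CHARSET = re.compile(
    rb"""<meta[^>]*?charset\s*=\s*["']?\s*([A-Za-z0-9_.:-]+)""",
    re.IGNORECASE,
)


def sniff_meta_charset(document, limit=1024):
    """Look for a charset declaration within the first `limit` bytes."""
    match = META_CHARSET.search(document[:limit])
    if match is None:
        return None
    return match.group(1).decode("ascii").lower()


early = b'<!doctype html><html lang="en"><head><meta charset="utf-8">'
late = b"<!doctype html><html><head>" + b" " * 2000 + b'<meta charset="utf-8">'
print(sniff_meta_charset(early))  # declaration found
print(sniff_meta_charset(late))   # declaration sits too far into the document
```

The second document contains a perfectly good declaration, but it arrives after the cutoff, so this sketch never sees it. That is the risk we avoid by putting the meta element first in the head.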

This means that every HTML5 document should begin very much like the following (the only differences being the title text, possibly the value of the lang attribute, the use of white space, single versus double quotation marks around attribute values, and capitalization).

<!doctype html>

<html lang="en">

<head>

<meta charset="utf-8">

<title>Example title</title>

</head>

<body></body>

</html>

Now you might be thinking, "Great, I can specify the character set and encoding in the HTML document. I'd much rather do that than worry about HTTP header field values."

I don't blame you. But that raises the question, "What happens if the character set and encoding are set in both places?"

Maybe somewhat surprisingly, the information in the HTTP headers takes precedence. Yes, that's right, the meta element and charset attribute do not override the HTTP headers. (I think I remember being surprised by this.) 🙁 (Slightly Frowning Face emoji, U+1F641)

The W3C tells us in "Declaring Character Encodings in HTML":

If you have access to the server settings, you should also consider whether it makes sense to use the HTTP header. Note however that, since the HTTP header has a higher precedence than the in-document meta declarations, content authors should always take into account whether the character encoding is already declared in the HTTP header. If it is, the meta element must be set to declare the same encoding.

There's no getting away from it. To avoid problems, we should always make sure the value is properly set in the HTTP header as well as in the HTML document.
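The precedence rule can be written down in a few lines. This is only a sketch of the rule as described here: real browsers consider more inputs (a byte order mark, for example), and both the function name `choose_encoding` and the windows-1252 fallback are assumptions made for illustration.

```python
def choose_encoding(http_charset, meta_charset, fallback="windows-1252"):
    """Return (encoding, warning), letting the HTTP header win over the meta element."""
    http_charset = http_charset.lower() if http_charset else None
    meta_charset = meta_charset.lower() if meta_charset else None

    if http_charset:
        warning = None
        if meta_charset and meta_charset != http_charset:
            # The mismatch the W3C warns about: the meta element is ignored.
            warning = f"meta says {meta_charset!r} but HTTP header says {http_charset!r}"
        return http_charset, warning
    if meta_charset:
        return meta_charset, None
    return fallback, "no encoding declared"


print(choose_encoding("ISO-8859-1", "utf-8"))
```

A mismatch like the one printed above is exactly the case in which a correctly written meta element does us no good at all.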

An Encoding By Any Other Name

Before we finish with character sets and encoding and move on to emoji, there are two other complications to consider. The first is as subtle as it is obvious.

It's not enough to declare a document is encoded in UTF-8 — it must be encoded in UTF-8! To accomplish this, your editor needs to be set to encode the document as UTF-8. It should be a preference within the application.
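Here is a quick sketch of what goes wrong when the declaration and the actual bytes disagree. The helper `is_valid_utf8` and the sample strings are mine; the encode and decode calls are standard Python.

```python
def is_valid_utf8(data):
    """Report whether a byte string can be decoded as UTF-8."""
    try:
        data.decode("utf-8")
    except UnicodeDecodeError:
        return False
    return True


# "café" followed by the octopus emoji, saved by an editor set to UTF-8.
saved_as_utf8 = "caf\u00e9 \U0001F419".encode("utf-8")
# "café" saved by an editor set to Latin-1 instead.
saved_as_latin1 = "caf\u00e9".encode("latin-1")

print(is_valid_utf8(saved_as_utf8))
print(is_valid_utf8(saved_as_latin1))

# The reverse mistake: UTF-8 bytes read as Latin-1 turn "é" into two characters.
misread = "\u00e9".encode("utf-8").decode("latin-1")
print(misread)
```

The Latin-1 file fails the check even though a UTF-8 declaration in the header or meta element would claim otherwise, and the misread string is the familiar garbage you have probably seen on a web page somewhere.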

Say, I have a joke for you… What time is it when you have an editor that doesn't allow you to set the encoding to UTF-8?

Punchline: Time to get a new editor! 🙄 (Face with rolling eyes emoji, U+1F644)

So, we've specified the character set and encoding both in the HTTP headers and in the document itself, and we've taken care that our files are encoded in UTF-8. Now, thanks to Unicode and UTF-8, we can know, without any doubt, that our documents will be interpreted and displayed properly for every visitor using any browser or other user agent on any device, running any software, anywhere in the world. But is that true? No, it's not quite true.

There is still a missing piece of the puzzle. We'll come back to this. Building suspense, that's good writing! 😃 (Smiling Face with Mouth Open emoji, U+1F603)

⛅

🎈

🌲 🏃 🏡 🌲🌲 🌲

So What Are Emoji?

We've already mentioned the Unicode Consortium, the non-profit responsible for the Unicode standard.

There's a subcommittee of the Unicode Consortium dedicated to emoji, called, unsurprisingly, the Unicode Emoji Subcommittee. As with the rest of the Unicode standard, the Unicode Consortium and its website (unicode.org) are the authoritative source for information about emoji. Fortunately for us, it provides a wealth of accessible information, as well as some more formal technical documents, which can be a little harder to follow. An example of the former is its "Emoji and Dingbats" FAQ.

Q: What are emoji?

A: Emoji are "picture characters" originally associated with cellular telephone usage in Japan, but now popular worldwide. The word emoji comes from the Japanese 絵 (e ≅ picture) + 文字 (moji ≅ written character).

Note: See those Japanese characters in this primarily English-language document? Thanks Unicode!

Emoji are often pictographs — images of things such as faces, weather, vehicles and buildings, food and drink, animals and plants — or icons that represent emotions, feelings, or activities. In cellular phone usage, many emoji characters are presented in color (sometimes as a multicolor image), and some are presented in animated form, usually as a repeating sequence of two to four images — for example, a pulsing red heart.

Q: Do emoji characters have to look the same wherever they are used?

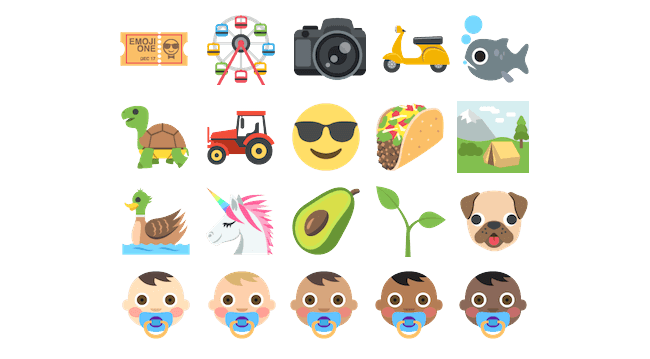

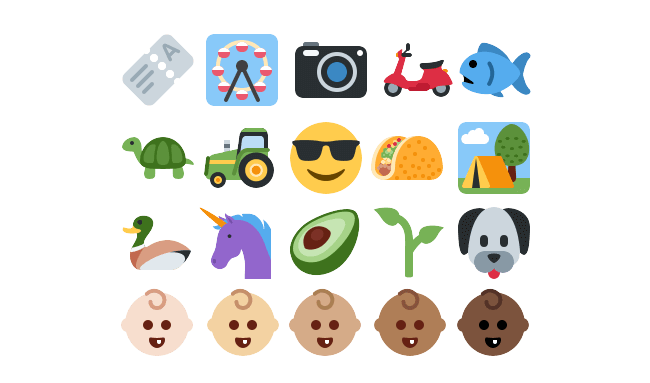

A: No, they don't have to look the same. For example, here are just some of the possible images for U+1F36D LOLLIPOP, U+1F36E CUSTARD, U+1F36F HONEY POT, and U+1F370 SHORTCAKE:

In other words, any pictorial representation of a lollipop, custard, honey pot or shortcake respectively, whether a line drawing, gray scale, or colored image (possibly animated) is considered an acceptable rendition for the given emoji. However, a design that is too different from other vendors' representations may cause interoperability problems: see Design Guidelines in UTR #51.

Read through just that one FAQ, and this article, of course 😁 (Grinning Face with Smiling Eyes emoji, U+1F601), and you'll have a better handle on emoji than most people ever will.

How Do We Use Emoji?

The short answer is, the same way we use every other character. As we've already discussed, emoji are symbols associated with code points. What's special about them is just semantics — i.e. the meaning we ascribe to them, not the mechanics of them.
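We can check this for ourselves. The sketch below treats an emoji and a plain letter in exactly the same way, using only Python's built-in `ord` and the standard `unicodedata` module; the helper name `describe` is made up.

```python
import unicodedata


def describe(character):
    """Show the code point, Unicode name and UTF-8 bytes of one character."""
    code_point = ord(character)
    return {
        "code_point": f"U+{code_point:04X}",
        "name": unicodedata.name(character),
        "utf8_bytes": character.encode("utf-8").hex(" "),
    }


print(describe("A"))
print(describe("\U0001F419"))  # the octopus emoji
```

The only differences are the size of the number and how many bytes UTF-8 needs to store it. Nothing about the mechanics is emoji-specific.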

If you have a key on a keyboard mapped to a particular character, producing that character is as simple as pressing the key. However, considering that, as we've seen, more than 120,000 characters are currently in use, and the space defined by Unicode allows for more than 1.1 million of them, creating a keyboard large enough to assign a character to each key is probably not a good strategy.

Aside: It's worth noting that there are emoji keyboards — both the software keyboards like those you'll find on touch devices such as smartphones and at least one physical keyboard as well. The "Emoji Keyboard," a product of a company named EmojiWorks, is a Bluetooth wireless keyboard for Windows, macOS and iOS that combines a standard English-language layout with special emoji keys, labels and modifiers. From EmojiWorks' website:

TYPE OVER 120 NEW EMOJI like poo, heart, monkey, kiss, 100, unicorn 🦄, and skin tone modifiers faster than a touchscreen right on your keyboard keys.

This is not an endorsement of the Emoji Keyboard, which I have never used. In fact, the description of the product hints at the limitations of the very idea. Though there are well over 1,800 emoji at present, this physical keyboard allows for direct entry of only 120 of them, even while assigning multiple emoji to the same keys. 🙃 (Upside-down Face, U+1F643)

When we exhaust the reach of our keyboards, we can use a software utility to insert characters.

Recent versions of macOS include an "Emoji & Symbols" panel that can be accessed from anywhere in the OS (via the menu bar or the keyboard shortcut Control + Command + Space). Other operating systems offer similar capabilities to view and click or to copy and paste emoji into text-entry fields. Applications may offer additional app-specific features for inserting emoji beyond the system-wide utilities.

Note: The current version of Apple's desktop OS has officially been rebranded as "macOS" with version 10.12 (macOS Sierra), bringing it in line with the naming scheme of the company's other platforms: iOS, tvOS, watchOS. The previous version, 10.11 (El Capitan), was officially "OS X," which had been the name of Apple's desktop OS since version 10.8 (Mountain Lion). Earlier versions were known as "Mac OS X." Throughout this article I will use "macOS" generally as well as for references to the current version. When referring to a prior release of the OS, I will use the name appropriate to that version.

Lastly, we can take advantage of character references to enter emoji (and any other character we like, for that matter) on the web.

Character References

Character references are commonly used as a way to include syntax characters as part of the content of an HTML document, and also can be used to input characters that are hard to type or not available otherwise.

Note: I'm sure many of you are familiar with character references. But if you keep reading this section, it wouldn't surprise me if you learn something new.

HTML is a markup language, and, as such, HTML documents contain both content and the instructions describing the document together as plain text in the document itself. Typically, the vast majority of characters are part of the document's content. However, there are other "special" characters in the mix. In HTML, these form the tags corresponding to the HTML elements that define the structure and semantics of the document. Moreover, it's worth taking a moment to recognize that the syntax itself — i.e. its implementation — creates a need for additional markup-specific characters. The ampersand is a good example. The ampersand (&) is special because it marks the beginning of all other character references. If the ampersand itself were not treated specially, we'd need another mechanism altogether.

Syntax characters are treated as special, and should never be used as part of the content of a document, because they are always interpreted specially, regardless of the author's intent. Mistakenly using these characters as content makes it difficult or impossible for a browser or other user agent to parse the document correctly, leading to all sorts of structural and display issues. But aside from their special status, markup-related characters are characters like any other, and very often we need to use them as content. If we can't type the literal characters, then we need some other way to represent them. We can use character references for this, referred to as "escaping" the character, as in getting outside of (i.e. escaping) the character's markup-specific meaning.

What characters need to be escaped? According to the W3C, three characters should always be escaped: the less-than symbol (<, &lt;), the greater-than symbol (>, &gt;) and the ampersand (&, &amp;). Just those three.

Two others, the double quote (", &quot;) and single quote (', &apos;), are often escaped based on context; in particular, when they appear as part of the value of an attribute and the same character is used as the delimiter of the attribute value.

Note: Even this is a bit of a fib, though it comes from a typically reliable source. To be safe, we can always escape those characters. But in fact, because of the way document parsing works, we can often get away without escaping one or more of them.
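In practice, we rarely escape these characters by hand. As a small illustration, Python's standard `html.escape` function applies the conservative "always escape" rule for us; the two wrapper names below are hypothetical.

```python
import html


def escape_text(text):
    """Escape the three always-escaped characters for use as element content."""
    return html.escape(text, quote=False)


def escape_attribute(value):
    """Also escape both kinds of quotes, for use inside an attribute value."""
    return html.escape(value, quote=True)


print(escape_text("5 > 3 & 2 < 4"))
print(escape_attribute('say "hi", it\'s fine'))
```

One detail worth noticing: for the single quote, this function emits a numeric reference (&#x27;) rather than the named &apos;. Both refer to the same character.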

Aside: More about context-specific characters: If you've been around web design and development for even a little while, you have probably read something much like what I've written above many times. Words like "special" are thrown around as if their inherent specialness is a property of the universe. The problem with that way of thinking is that it impedes the sort of understanding that comes from discovering where a thing is documented, and eventually figuring out how it works. Unless we get into the habit of looking for authoritative information, it's easy to be misled and contribute to the sorts of problems we're trying to avoid. In fact, this seemingly innocuous issue has been, and no doubt will continue to be, a matter of not just healthy debate but also of futile bickering within the community.

Let's start with the W3C's answer. The document "Using Character Escapes in Markup and CSS" tells us:

There are three characters that should always appear in content as escapes, so that they do not interact with the syntax of the markup. These are part of the language for all documents based on XML and for HTML.

You may also want to represent the double-quote (") as &quot; and the single quote (') as &apos; — particularly in attribute text when you need to use the same type of quotes as those that surround the attribute value.

But we can do even better than that. Rather than take at face value what we read in some W3C informational document, we can dig deeper for an authoritative answer to the question "Why must these characters be escaped?" or alternatively, "What is it about the HTML5 specification that requires these characters to be escaped?"

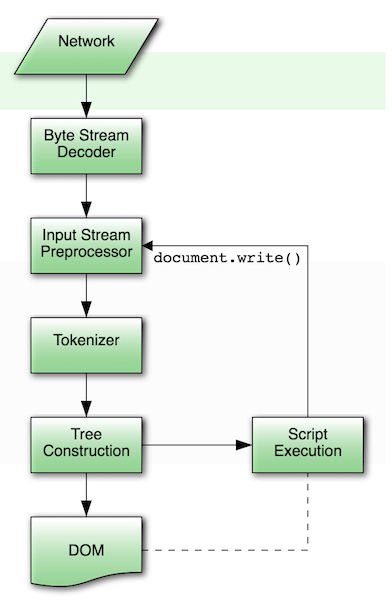

For an authoritative answer to those questions, we can consult the HTML5 spec itself and gain some insight into HTML5 parsing as prescribed by the standard, discussed in "Section 12.2: Parsing HTML Documents." From Section 12.2.1: Overview of the Parsing Model:

The input to the HTML parsing process consists of a stream of Unicode code points, which is passed through a tokenization stage followed by a tree construction stage. The output is a Document object.

The special status of these characters is dictated by the HTML5 specification and enforced by the tokenization process. According to the HTML5 spec, implementations must behave as if operating as a state machine while tokenizing an HTML document.

The concept of a state machine comes to us from computer science and is, generally speaking, an abstract model of computing in which a theoretical machine (the state machine) can only exist in one of a finite number of states. In each state, the machine performs one of some number of actions in response to an event or trigger (e.g. transitioning into the state, receiving input, etc.), and then possibly transitions into a new state, where it will behave differently, according to the rules associated with that new state (the rules of the original state no longer being applicable).

Tokenization is a process whereby a continuous stream of text is broken up into a collection of "tokens," which are meaningful words or word phrases, for further processing. This is very much like the way we, as natural-language parsers, break up the stream of characters in an article like this one into meaningful chunks — words, phrases, sentences and so on.

It is this state machine behavior and the tokenization process that explain why we must escape syntax characters, and when we don't need to. Encountering a "special" character causes the parser to change states.

From "Section 12.2.4 Tokenization":

Implementations must act as if they used the following state machine to tokenize HTML. The state machine must start in the data state. Most states consume a single character, which may have various side-effects, and either switches the state machine to a new state to reconsume the current input character, or switches it to a new state to consume the next character, or stays in the same state to consume the next character. Some states have more complicated behaviour and can consume several characters before switching to another state. In some cases, the tokenizer state is also changed by the tree construction stage.

When a state says to reconsume a matched character in a specified state, that means to switch to that state, but when it attempts to consume the next input character, provide it with the current input character instead.

The exact behavior of certain states depends on the insertion mode and the stack of open elements. Certain states also use a temporary buffer to track progress, and the character reference state uses a return state to return to the state it was invoked from.

The output of the tokenization step is a series of zero or more of the following tokens: DOCTYPE, start tag, end tag, comment, character, end-of-file. …

Note: I said that we can sometimes get away without escaping markup-related characters — the greater-than character (>), for example. The description above of the HTML5 tokenization process hints at why that is. In order for the > to be interpreted as special, the tokenizer must have previously seen a less-than symbol (<) to go through the transitions necessary to put it in a state where > is recognized as "special." A standalone greater-than symbol will not itself initiate that sequence of steps.
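To see the idea in miniature, here is a toy tokenizer with just three states. It is a drastic simplification of the state machine in the spec (no attributes, comments or character references), and the function name `tokenize` and the token shapes are my own, but it shows why a lone > is harmless while a < followed by a letter is not.

```python
def tokenize(text):
    """Split text into ("text", ...) and ("tag", ...) tokens with a three-state machine."""
    tokens = []
    state = "data"
    buffer = ""
    i = 0
    while i < len(text):
        ch = text[i]
        if state == "data":
            if ch == "<":
                state = "tag_open"  # might be markup; decide on the next character
            else:
                buffer += ch        # includes ">", which means nothing special here
            i += 1
        elif state == "tag_open":
            if ch == "/" or (ch.isascii() and ch.isalpha()):
                if buffer:
                    tokens.append(("text", buffer))
                buffer = ""
                state = "tag_name"  # reconsume ch in the new state
            else:
                buffer += "<"       # the "<" was just text after all
                state = "data"      # reconsume ch in the data state
        else:  # state == "tag_name"
            if ch == ">":
                tokens.append(("tag", buffer))
                buffer = ""
                state = "data"
            else:
                buffer += ch
            i += 1
    # Input ended mid-tag: treat whatever was collected as plain text.
    if state == "tag_open":
        buffer += "<"
    elif state == "tag_name":
        buffer = "<" + buffer
    if buffer:
        tokens.append(("text", buffer))
    return tokens


print(tokenize("<p>1 > 0</p>"))
print(tokenize("a < b"))
```

In the first example, the > inside the paragraph is consumed in the data state, where it is just another character. The > that follows p is consumed in the tag name state, where it ends the tag.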

If you're interested in a more detailed run-through of just how complicated and fiddly the exceptions to the syntax-character rules can get in practice, have a look at the blog post "Ambiguous Ampersands," in which Mathias Bynens considers precisely when these characters must be escaped and when they don't need to be in practice.

But here's the thing: These types of liberties can, and frequently do, cascade through our markup when we inevitably make other mistakes. For that reason alone, you may want to stick to the advice from the W3C. Not only is it the safer approach, it's a lot easier to remember, and that's not a bad thing.

If you're still not convinced about the benefits of preferring overly conservative general rules in the name of consistency, and about sparing yourself from the technical details of arcane exceptions, then take a look at "Section 12.1.2.4 Optional Tags," where it is explained exactly when and under what circumstances "certain tags can be omitted."

In this section, we learn that many of the tags we are accustomed to thinking of as mandatory are, in fact, optional. It is not that the elements aren't required. On the contrary, the elements are absolutely required, and because they are required, the associated tags are implied if left out and, so, optional. Though leaving these tags out will not affect the validity of the HTML document, it may or may not affect the DOM, depending on the precise structure of the raw markup. See how simple? 😖 (Confounded Face, U+1F616)

We have this example from the spec:

<!DOCTYPE HTML>

<title>Hello</title>

<p>Welcome to this example.

This would be equivalent to this document, with the omitted tags shown in their parser-implied positions; the only white-space text node that results from this is the new line at the end of the head element:

<!DOCTYPE HTML>

<html><head><title>Hello</title>

</head><body><p>Welcome to this example.</p></body></html>

Even if this does not come as a surprise to you, I wouldn't bet on the likelihood of getting this exactly right every time, and then keeping track of these optional tags throughout your documents. The whole thing strikes me as being counterproductive. A standards-compliant browser or other user agent will get it right every time, unless we get it wrong first. Its knowledge of the specs may be programmed in, and its recognition nearly instantaneous and flawless. Ours isn't. It's probably better to err on the side of the simplicity that comes with fewer, more generalizable rules and patterns.

In addition to these few syntax characters, there are similar references for every other Unicode character as well, all 120,000+ of them. These references come in two types:

- named character references (named entities)

- numeric character references (NCRs)

Note: It is perfectly confusing that the term "numeric character reference" is abbreviated NCR, which could just as easily be used as the abbreviation for named character reference. 😩 (Weary Face, U+1F629)

Named character references

Named character references (also known as named entities, entity references or character entity references) are pre-defined word-like references to code points. There are quite a few of them. The WHATWG provides a handy comprehensive table of character reference names supported by HTML5, listing 2,231 of them. That's far, far more than you will ever likely see used in practice. After all, the idea is that these names will serve as mnemonics (memory aids). But it's difficult to remember 2,231 of anything. If you don't know that a named reference exists, you won't use it.

But where does this information come from? How can we be certain that the information in the table referenced above, which I describe as "comprehensive," is in fact comprehensive? Not only are those perfectly valid questions, it's exactly the type of questioning we need more of, to cut through the clutter of hearsay and other misinformation that is all too common online.

The very best sources of authoritative information are the specifications themselves, and that "handy, comprehensive table" is in fact a link to section 12.5 of the WHATWG's HTML5 spec.

You may have heard the number 252, as in, "There are 252 named entities." This comes from HTML 4.x and, more specifically, the HTML 4.x document type definitions (DTDs). From the Wikipedia article "List of XML and HTML Character Entity References":

The HTML 4 DTDs define 252 named entities, references to which act as mnemonic aliases for certain Unicode characters.

You'll find the list of those named entities in the Wikipedia article, although, because we're dealing with HTML5, which does not use the HTML 4.x DTDs, that's probably unimportant at best, and misleading at worst.
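As a quick cross-check of these two counts, Python's standard library happens to bundle both tables: `html.entities.html5` for the HTML5 names and `html.entities.name2codepoint` for the HTML 4 names. The sketch below only counts the entries. It assumes the copies shipped with your Python version mirror the specs, so treat the specs themselves as the final word.

```python
from html.entities import html5, name2codepoint

# HTML5 table: keys are names such as "gt;". A few legacy names are
# also listed without the trailing semicolon (for example "gt").
html5_count = len(html5)

# HTML 4 table: keys are bare names such as "gt", values are code points.
html4_count = len(name2codepoint)

print("HTML5 named references:", html5_count)
print("HTML 4 named entities:", html4_count)
print("gt; ->", html5["gt;"], "| gt ->", chr(name2codepoint["gt"]))
```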

Let's say you wanted to include a greater-than symbol (>) in the content of your document.

As we have just seen, and as you probably already knew, you can't just press the key bearing that symbol on your keyboard. That literal character is special and may be treated as markup, not content. There is a named character reference for the greater-than symbol. It looks like &gt;. Typing that in your document will get you the character you're looking for every time: >.

But if &gt; is treated as an entity, how would one (how did I) type that entity reference without having it be replaced by the corresponding character? Well, we use an entity reference for the leading ampersand (a reserved character). So, to have the entity reference appear on the page, rather than the greater-than symbol itself, you would type &amp; followed by gt;. (By the way, to get this &amp;, I had to type &amp;amp;.) Situations like this are the reason why we have so many facial expression emoji. 😓 (Face with Cold Sweat, U+1F613)

So, in summary, named entities are predefined mnemonics for certain Unicode characters.

Essentially, a group of people thought it would be nice if we could refer to & as &amp;, so they arranged to make that possible, and now &amp; corresponds to the code point for &.
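The layers of escaping described above can be made concrete with a small sketch. It uses Python's `html.escape` and `html.unescape` purely as stand-ins for "what an author types" and "what a parser decodes"; the variable names are illustrative.

```python
import html

literal = ">"
escaped_once = html.escape(literal)        # what you type to display ">"
escaped_twice = html.escape(escaped_once)  # what you type to display "&gt;"

print(escaped_once)   # &gt;
print(escaped_twice)  # &amp;gt;

# Decoding removes exactly one level of escaping each time.
print(html.unescape(escaped_twice))  # &gt;
print(html.unescape(escaped_once))   # >
```

Each pass through the decoder peels off one layer, which is why showing a reference as text always costs one extra &amp;.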

Numeric Character References

There isn't a named reference for every Unicode character. We just saw that there are 2,231 of them. That's a lot, but certainly not all. You won't find any emoji in that list, for one thing. But while there are not always named references, there are always numeric character references. We can use these for any Unicode character, both those that have named references and those that don't.

An NCR is a reference that uses the code point value in decimal or hexadecimal form. Unlike the names, there is nothing easy to remember about these.

We've looked at the ampersand (&), for which there is a named character reference, &amp;. We can also write this as a numeric reference in decimal or hexadecimal form:

* decimal: `&#38;` (&)
* hexadecimal: `&#x26;` (&)

Note: The # indicates that what follows is a numeric reference, and #x indicates that the numeric reference is in hexadecimal notation.
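Because an NCR is nothing more than the code point written out in decimal or hexadecimal, it can be derived mechanically. Here is a minimal sketch; `to_ncr` is a hypothetical helper written for this illustration, not part of any library.

```python
import html

def to_ncr(char: str, hexadecimal: bool = False) -> str:
    """Return the numeric character reference for a single character."""
    code_point = ord(char)
    return f"&#x{code_point:X};" if hexadecimal else f"&#{code_point};"

print(to_ncr("&"))                    # &#38;
print(to_ncr("&", hexadecimal=True))  # &#x26;

# Both forms decode to the same character.
print(html.unescape("&#38;") == html.unescape("&#x26;") == "&")  # True
```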

You'll find a table containing a complete list of emoji in the document "Full Emoji Data," maintained by the Unicode Consortium's Emoji Subcommittee. It includes several different representations of each emoji for comparison and, importantly, the Unicode code point in hexadecimal form, as well as the name of the character and some additional information. It's a great resource and makes unnecessary any number of other websites that often contain incomplete, out of date or otherwise partially inaccurate information.

Note: If you look carefully, you might spot some unfamiliar emoji in this list. As I write this, the list includes new emoji from the just recently released Unicode Version 9.0. So, you can find 🤠 Face with Cowboy Hat (U+1F920), 🤷 Shrug (U+1F937), 🤞 Hand with Index and Middle Fingers Crossed (U+1F91E), 🥔 Potato (U+1F954) and 68 others from the newest version of Unicode.

To look at just a few:

- 💩 (`&#x1F4A9;`): Pile of Poo
- 🍩 (`&#x1F369;`): Doughnut (that's one delicious-looking emoji)
- 🤸 (`&#x1F938;`): Person Doing a Cartwheel (new with version 9)
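Going from a code point as listed in "Full Emoji Data" to a usable reference is a one-line conversion. The sketch below assumes labels in the usual `U+XXXX` notation; `describe` is a hypothetical helper, and the name lookup depends on the Unicode version bundled with your Python build, so newer emoji may come back as unknown on older versions.

```python
import unicodedata

def describe(label: str) -> tuple:
    """Turn a label like 'U+1F4A9' into (character, hex NCR, Unicode name)."""
    value = int(label.upper().replace("U+", ""), 16)
    char = chr(value)
    # Fall back to a placeholder if this Python's Unicode data lacks the name.
    name = unicodedata.name(char, "<not in this Unicode database>")
    return char, f"&#x{value:X};", name

for label in ("U+1F4A9", "U+1F369", "U+1F938"):
    print(label, *describe(label))
```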

Are you seeing a box or other generic symbol, rather than an image for the last emoji in the list (and the preceding paragraph as well)? That makes perfect sense, if you're reading this around the time I wrote it. In fact, it would be more remarkable if you were seeing emoji there. That last emoji, Person Doing a Cartwheel (U+1F938), is new as of Unicode Version 9.0, released on 21 June 2016. It isn't surprising at all if your platform or application doesn't yet support an emoji released within the past several days (or weeks). Of course, as this newest release of Unicode begins to roll out, Version 9.0's 72 new emoji will begin to appear, including 🤸 (Person Doing a Cartwheel, U+1F938), the symbol for which will automatically replace the blank here and in the list above.

Do we really have to type in these references? No, if the platform or application you're using allows you to enter emoji in some other way, that will work just fine. In fact, it's preferred. Emoji are not syntax characters and so can always be entered directly.

Here's a basketball emoji I inserted into this document from OS X's "Emojis and Symbols" panel: 🏀 (Basketball, U+1F3C0).

If I pause for a moment to think, it's pretty cool that these emoji show up as images in plain-text editors. Of course, it makes perfect sense: emoji are characters every bit as much as the Latin alphabet letter "a." On the other hand, there's an image in my plain-text document!

This brings up a more general question, "If there's a reference for every Unicode character, when should we use them?"

The W3C provides us with a perfectly reasonable, well-justified answer to this question in a Q&A document titled "Using Character Escapes in Markup and CSS". The short version of its answer to the question of when should we use character references is, as little as possible:

When not to use escapes

It is almost always preferable to use an encoding that allows you to represent characters in their normal form, rather than using character entity references or NCRs.

Using escapes can make it difficult to read and maintain source code, and can also significantly increase file size.

Many English-speaking developers have the expectation that other languages only make occasional use of non-ASCII characters, but this is wrong.

Take for example the following passage in Czech.

Jako efektivnější se nám jeví pořádání tzv. Road Show prostřednictvím našich autorizovaných dealerů v Čechách a na Moravě, které proběhnou v průběhu září a října.

If you were to require NCRs for all non-ASCII characters, the passage would become unreadable, difficult to maintain and much longer. It would, of course, be much worse for a language that didn't use Latin characters at all.

Jako efektivn&#x115;j&#x161;&#xED; se n&#xE1;m jev&#xED; po&#x159;&#xE1;d&#xE1;n&#xED; tzv. Road Show prost&#x159;ednictv&#xED;m na&#x161;ich autorizovan&#xFD;ch dealer&#x16F; v &#x10C;ech&#xE1;ch a na Morav&#x11B;, kter&#xE9; prob&#x11B;hnou v pr&#x16F;b&#x11B;hu z&#xE1;&#x159;&#xED; a &#x159;&#xED;jna.

As we said before, use characters rather than escapes for ordinary text.

So, we should only use character references when we absolutely must, such as when escaping markup-specific characters, but not for "ordinary text" (including emoji). Still, it is nice to know that we can always use a numeric character reference to input any Unicode character at all into our HTML documents. Literally, a world 🌎 (Earth Globe Americas, U+1F30E) of characters are open to us.
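To get a rough feel for the size cost the W3C describes, here is a small sketch that measures the opening of the Czech passage both ways. It leans on Python's built-in `xmlcharrefreplace` error handler, which emits decimal NCRs rather than the hexadecimal ones in the W3C example; the sample string is shortened for illustration.

```python
import html

passage = "Jako efektivnější se nám jeví pořádání tzv. Road Show"

# Literal characters, stored as UTF-8.
utf8_bytes = passage.encode("utf-8")

# The same text with every non-ASCII character replaced by a decimal NCR.
escaped_bytes = passage.encode("ascii", errors="xmlcharrefreplace")

print(len(utf8_bytes), "bytes as UTF-8")
print(len(escaped_bytes), "bytes with NCRs")
print(escaped_bytes.decode("ascii"))
```

Decoding the escaped form with `html.unescape` gives back the original text, so nothing is gained except ASCII-only source, at the cost of length and readability.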

The final point I will make before moving on has to do with case-sensitivity. This is another one of those issues about which there is much confusion and debate, despite the fact that it is not open to interpretation.

Character References and Case-Sensitivity

Named character references are case-sensitive and must match the case of the names given in the table of named character references supported by HTML that is part of the HTML5 spec. Having said that, if you look at the named references in that table carefully, you will see more than one name that maps to the same code point (and, so, the same character).

Take the ampersand. You'll find the following four entries in the table for this single character:

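* AMP; → U+00026 (&)
* AMP → U+00026 (&)
* amp; → U+00026 (&)
* amp → U+00026 (&)

This may be where some of the confusion originates. One could easily be fooled into assuming that this simulated, limited case-insensitivity is the real thing. But it would be a mistake to think that these kinds of variations are consistent. They definitely are not. For example, the name Backslash; is valid only with exactly that capitalization.

Backslash; → U+02216 (∖)

You won't find backslash;, BACKSLASH; or any other case variant of that name in the table, and so no other capitalization is valid. (Strictly speaking, U+02216 is the Set Minus character, and the table does list a few differently spelled names for it, such as setminus;. Those are separate names, not case variants. The ordinary backslash, U+0005C, is bsol;.)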

If we look closely at the four named entities for the ampersand (U+00026) again, you'll see that half of them include a trailing semicolon (;) and the other half don't. This, too, may have led to confusion, with some people mistakenly believing that the semicolon is optional. It isn't. There are some explicitly defined named character references, such as AMP and amp, without it, but the vast majority of named references and all numeric references include a semicolon. Furthermore, none of the named references without the trailing semicolon can be used in HTML5. 😮 (Face with Mouth Open, U+1F62E) Section 12.1.4 of the HTML5 specification tells us (emphasis added):

Character references must start with a U+0026 AMPERSAND character (&). Following this, there are three possible kinds of character references:

Named character references

The ampersand must be followed by one of the names given in the named character references section, using the same case. The name must be one that is terminated by a U+003B SEMICOLON character (;).

Decimal numeric character reference

The ampersand must be followed by a U+0023 NUMBER SIGN character (#), followed by one or more ASCII digits, representing a base-ten integer that corresponds to a Unicode code point that is allowed according to the definition below. The digits must then be followed by a U+003B SEMICOLON character (;).

Hexadecimal numeric character reference

The ampersand must be followed by a U+0023 NUMBER SIGN character (#), which must be followed by either a U+0078 LATIN SMALL LETTER X character (x) or a U+0058 LATIN CAPITAL LETTER X character (X), which must then be followed by one or more ASCII hex digits, representing a hexadecimal integer that corresponds to a Unicode code point that is allowed according to the definition below. The digits must then be followed by a U+003B SEMICOLON character (;).

"The ampersand must be followed by one of the names given in the named character references section, using the same case. The name must be one that is terminated by a U+003B SEMICOLON character (;)."

That answers that.

By the way, the alpha characters in hexadecimal numeric character references (a to f, A to F) are always case-insensitive.
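These rules are easy to spot-check. The sketch below uses Python's bundled copy of the HTML5 table (`html.entities.html5`) as a stand-in for the spec's table, and `html.unescape` as a stand-in for a parser. Both are assumptions of convenience; the spec remains the authority.

```python
import html
from html.entities import html5

# Names are case-sensitive: only the spellings listed in the table exist.
for name in ("amp;", "AMP;", "Amp;", "Backslash;", "backslash;"):
    print(f"{name!r:14} in table: {name in html5}")

# In hexadecimal NCRs, the digits and the x/X marker are case-insensitive.
variants = ["&#x1f4a9;", "&#x1F4A9;", "&#X1f4a9;"]
decoded = {html.unescape(v) for v in variants}
print(decoded)
```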

⛅

🎈

🌲 🏃 🏡 🌲🌲 🌲

Glyphs

At the end of the encoding section, I asked whether Unicode and UTF-8 encoding are enough to ensure that our documents, interfaces and all of the characters in them will display properly for all visitors to our websites and all users of our applications. The answer was no. But if you remember, I left this as a cliffhanger. 😯 (Hushed Face, U+1F62F) There's just one thing left to complete our picture of emoji (pun intended) 😬 (Grimacing Face U+1F62C).

Unicode and UTF-8 do most of the heavy lifting, but something is missing. We need a glyph associated with every character in order to be able to see a representation of the character.

From the Wikipedia entry for glyph:

In typography, a glyph /'ɡlɪf/ is an elemental symbol within an agreed set of symbols, intended to represent a readable character for the purposes of writing and thereby expressing thoughts, ideas and concepts. As such, glyphs are considered to be unique marks that collectively add up to the spelling of a word, or otherwise contribute to a specific meaning of what is written, with that meaning dependent on cultural and social usage. For example, in most languages written in any variety of the Latin alphabet the dot on a lower-case i is not a glyph because it does not convey any distinction, and an i in which the dot has been accidentally omitted is still likely to be recognized correctly. In Turkish, however, it is a glyph because that language has two distinct versions of the letter i, with and without a dot.

The relationship between the terms glyph, font and typeface is that a glyph is a component of a font that is composed of many such glyphs with a shared style, weight, slant and other characteristics. Fonts, in turn, are components of a typeface (also known as a font family), which is a collection of fonts that share common design features but each of which is distinctly different.

Essentially, we need a font that includes a representation for the code point of the emoji we want to display. If we don't have that symbol, we'll get a blank space, an empty box or some other generic character as an indication that the symbol we're after isn't available. You've probably seen this. Now you understand why, if you didn't before.

Going back to the figure from the W3C's "Character Encodings: Essential Concepts," the original diagram featured the following five characters:

| Character | ⴰ | ⵣ | ⵓ | ⵍ | ! |
|---|---|---|---|---|---|
| Name | Tifinagh Letter Ya | Tifinagh Letter Yaz | Tifinagh Letter Yu | Tifinagh Letter Yal | Exclamation Point |
| Code point | U+2D30 | U+2D63 | U+2D53 | U+2D4D | U+0021 |
| NCR | `&#x2D30;` | `&#x2D63;` | `&#x2D53;` | `&#x2D4D;` | `&#x21;` |

What would happen if you tried to insert those characters into a web page using a numeric character reference? Let's see:

* ⴰ (`&#x2D30;`)
* ⵣ (`&#x2D63;`)
* ⵓ (`&#x2D53;`)
* ⵍ (`&#x2D4D;`)
* ! (`&#x21;`)

Chances are you can see the exclamation point, but some of the others might be missing, replaced by a box or other generic symbol.
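It helps to separate decoding from display. In the sketch below, every reference decodes to a well-defined character with a known name, whether or not any font on the machine can draw it. The example uses Python's `html.unescape` and `unicodedata` as stand-ins for what a browser does internally; the list of references is simply the five from the list above.

```python
import html
import unicodedata

references = ["&#x2D30;", "&#x2D63;", "&#x2D53;", "&#x2D4D;", "&#x21;"]

# Decoding depends only on the reference, never on installed fonts.
decoded = [html.unescape(ref) for ref in references]

for ref, char in zip(references, decoded):
    print(ref, f"U+{ord(char):04X}", unicodedata.name(char, "<unnamed>"))
```

If the printed names look right but the characters themselves show up as boxes in your terminal, that is the missing-glyph problem in action.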

As has already been mentioned, those symbols are Tifinagh characters. Tifinagh is "a series of abjad and alphabetic scripts used to write Berber languages." According to Wikipedia: