Imagine that this is what you know about me: I am a college-educated male between the ages of 35 and 45. I own a MacBook Pro and an iPhone 5, on which I browse the Internet via the Google Chrome browser. I tweet and blog publicly, where you can discover that I like chocolate and corgis. I’m married. I drive a Toyota Corolla. I have brown hair and brown eyes. My credit-card statement shows where I’ve booked my most recent hotel reservations and where I like to dine out.

If your financial services client provided you with this data, could you tell them why I’ve just decided to move my checking and savings accounts from them to a new bank? This scenario might seem implausible when laid out like this, but you’ve likely been in similar situations as an interactive designer, working with just demographics or website usage metrics.

We can discern plenty of valuable information about a customer from this data, based on what they do and when they do it. That data, however, doesn’t answer the question of why they do it, and how we can design more effective solutions to their problems through our clients’ websites, products and services. We need more context. User research helps to provide that context.

User research helps us to understand how other people live their lives, so that we can respond more effectively to their needs with informed and inspired design solutions. User research also helps us to avoid our own biases, because we frequently have to create design solutions for people who aren’t like us.

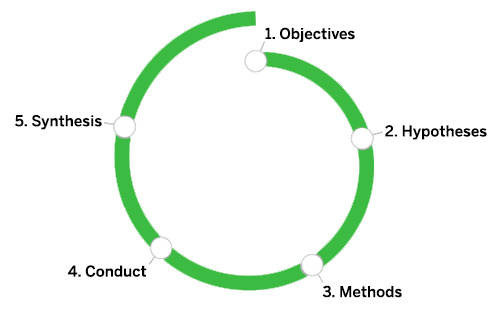

So, how does one do user research? Let me share with you a process we use at Frog to plan and conduct user research. It’s called the “research learning spiral.” The spiral was created by Erin Sanders, one of our senior interaction designers and design researchers. It has five distinct steps, which you go through when gathering information from people to fill a gap in your knowledge.

“The spiral is based on a process of learning and need-finding,” Sanders says. “It is built to be replicable and can fit into any part of the design process. It is used to help designers answer questions and overcome obstacles when trying to understand what direction to take when creating or moving a design forward.”

The first three steps of the spiral are about formulating and answering questions, so that you know what you need to learn during your research:

- Objectives: These are the questions we are trying to answer. What do we need to know at this point in the design process? What are the knowledge gaps we need to fill?

- Hypotheses: These are what we believe we already know. What are our team’s assumptions? What do we think we understand about our users, in terms of both their behaviors and our potential solutions to their needs?

- Methods: These address how we plan to fill the gaps in our knowledge. Based on the time and people available, what methods should we select?

Once you’ve answered the questions above and factored them into a one-page research plan that you can present to stakeholders, you can start gathering the knowledge you need through the selected research methods:

- Conduct: Gather data through the methods we’ve selected.

- Synthesize: Answer our research questions, and prove or disprove our hypotheses. Make sense of the data we’ve gathered to discover what opportunities and implications exist for our design efforts.

You already use this process when interacting with people, whether you are consciously conducting research or not. Imagine meeting a group of 12 clients who you have never worked with. You wonder if any of them has done user research before. You believe that only one or two of them have conducted as much user research as you and your team have. You decide to take a quick poll to get an answer to your question, asking everyone in the room to raise their hand if they’ve ever conducted user research. Five of them raise their hands. You ask them to share what types of user research they’ve conducted, jotting down notes on what they’ve done. You then factor this information into your project plan going forward.

In a matter of a few minutes, you’ve gone through the spiral to answer a single question. However, when you’re planning and conducting user research for an interactive project or product, each step you take through the spiral will require more time and energy, based on the depth and quantity of questions you need to answer. So, let’s take an in-depth spin through the research learning spiral. At each step of the spiral, I’ll share some of the activities and tools I use to aid my teams in managing the complexity of planning and conducting user research. I’ll also include a sample project to illustrate how those tools can support your team’s user research efforts.

1. Objectives: The Questions We Are Trying To Answer

Imagine that you’re in the middle of creating a next-generation program guide for TV viewers in Western Europe. Your team is debating whether to incorporate functionality for tablet and mobile users that would enable them to share brief clips from shows that they’re watching to social networks, along with their comments.

“Show clip sharing,” as the team calls it, sounds cool, but you aren’t exactly sure who this feature is for, or why users would want to use it.

Step back from the wireframing and coding, sit down with your team, and quickly discuss what you already know and understand about the product’s goal. To facilitate this discussion, ask your team to generate a series of framing questions to help them identify which gaps in knowledge they need to fill. They would write these questions down on sticky notes, one question per note, to be easily arranged and discussed.

These framing questions would take a “5 Ws and an H” structure, similar to the questions a reporter would need to answer when writing the lede of a newspaper story:

- “Who?” questions help you to determine prospective audiences for your design work, defining their demographics and psychographics and your baseline recruiting criteria.

- “What?” questions clarify what people might be doing, as well as what they’re using in your website, application or product.

- “When?” questions help you to determine the points in time when people might use particular products or technologies, as well as daily routines and rhythms of behavior that might need to be explored.

- “Where?” questions help you to determine contexts of use — physical locations where people perform certain tasks or use key technologies — as well as potential destinations on the Internet or devices that a user might want to access.

- “Why?” questions help you to explain the underlying emotional and rational drivers of what a person is doing, and the root reasons for that behavior.

- “How?” questions help you go into detail on what explicit actions or steps people take in order to perform tasks or reach their goals.

In less than an hour, you and your team can generate a variety of framing questions, such as:

- “Who would share program clips?”

- “How frequently would viewers share clips?”

- “Why would people choose to share clips?”

Debate which questions need to be answered right away and which would be valuable to consider further down the road. “Now is your time to ask the more ‘out there’ questions,” says Lauren Serota, an associate creative director at Frog. “Why are people watching television in the first place? You can always narrow the focus of your questions before you start research… However, the exercise of going lateral and broad is good exercise for your brain and your team.”

When you have a good set of framing questions, you can prioritize and cluster the most important questions, translating them into research objectives. Note that research objectives are not questions. Rather, they are simple statements, such as: “Understand how people in Western Europe who watch at least 20 hours of TV a week choose to share their favorite TV moments.” These research objectives will put up guardrails around your research and appear in your one-page research plan.

Don’t overreach in your objectives. The type of questions you want to answer, and how you phrase them as your research objective, will serve as the scope for your team’s research efforts. A tightly scoped research objective might focus on a specific set of tasks or goals for the users of a given product (“Determine how infrequent TV viewers in Germany decide which programs to record for later viewing”), while a more open-ended research objective might focus more on user attitudes and behaviors, independent of a particular product (“Discover how French students decide how to spend their free time”). You need to be able to reach that objective in the time frame you have allotted for the research.

2. Hypotheses: What We Believe We Already Know

You’ve established the objectives of your research, and your head is already swimming with potential design solutions, which your team has discussed. Can’t you just go execute those ideas and ship them?

If you feel this way, you’re not alone. All designers have early ideas and assumptions about their product. Some clients may have initial hypotheses that they would like “tested” as well.

“Your hypotheses often constitute how you think and feel about the problem you’ve been asked to solve, and they fuel the early stages of work,” says Jon Freach, a design research director at Frog. Don’t be afraid to address these hypotheses and, when appropriate, integrate them into your research process to help you prove or disprove their merit. Here’s why:

- Externalizing your hypotheses is important to becoming aware of and minimizing the influence of your team’s and client’s biases.

- Being aware of your hypotheses will help you select the right methods to fulfill your research objective.

- You can use your early hypotheses to help communicate what you’ve discovered through the research process. (“We believed that [insert hypothesis], but we discovered that [insert finding from research].”)
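The sentence template in that last point can be filled in mechanically once each hypothesis is paired with a finding. Here is a minimal Python sketch of the idea. The function name `reframe` is hypothetical, and the sample strings are paraphrased from this article's clip-sharing example rather than taken from a real study.

```python
def reframe(hypothesis: str, finding: str) -> str:
    """Fill in the 'We believed..., but we discovered...' sentence template."""
    # Strip trailing periods so the combined sentence is punctuated only once.
    hypothesis = hypothesis.strip().rstrip(".")
    finding = finding.strip().rstrip(".")
    return f"We believed that {hypothesis}, but we discovered that {finding}."


# Illustrative pairing only; both strings are paraphrases, not research data.
print(reframe(
    "TV watchers only want to share clips from shows they watch most frequently.",
    "they also share clips from shows they rarely watch if the clips are funny",
))
```

Keeping the hypothesis and the finding as separate inputs makes it easy to see, at a glance, which assumptions the research overturned and which it confirmed.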

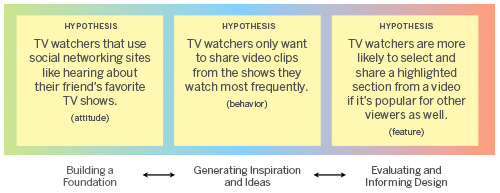

Generating research hypotheses is easy. Take the framing questions you wrote when formulating the objective, and have each team member spend five to eight minutes individually sketching answers to them, by writing out ideas on sticky notes, sketching designs and so forth. For example, when thinking about the clip-sharing feature for your next-generation TV program guide, your team members would then put their heads together and generate hypotheses such as these:

- Attitude-related hypothesis. “TV watchers who use social networks like to hear about their friends’ favorite TV shows.”

- Behavior-related hypothesis. “TV watchers only want to share clips from shows they watch most frequently.”

- Feature-related hypothesis. “TV watchers are more likely to share a highlight from a show if it’s popular with other viewers as well.”

3. Methods: How We Plan To Fill The Gaps In Our Knowledge

Once you have a defined research objective and a pile of design hypotheses, you’re ready to consider which research methods are most appropriate to achieving your objective. Usually, I’ll combine methods from more than one of the following categories to achieve my research objective. (People have written whole books about this subject. See the end of this article for further reading on user research methods and processes.)

Building a Foundation

Methods in this area could include surveys, observational or contextual interviews, and market and trend explorations. Use these methods when you don’t have a good understanding of the people you are designing for, whether they’re a niche community or a user segment whose behaviors shift rapidly. If you have unanswered questions about your user base — where they go, what they do and why — then you’ll probably have to draw upon methods from this area first.

Generating Inspiration and Ideas

Methods in this area could include diary studies, card sorting, paper prototyping and other participatory design activities. Once I understand my audience’s expertise and beliefs well, I’m ready to delve deeper into what content, functionality or products would best meet their needs. This can be done by generating potential design solutions in close collaboration with research participants, as well as by receiving their feedback on early design hypotheses.

Specifically, we can do this by generating or co-creating sketches, collages, rough interface examples, diagrams and other types of stimuli, as well as by sorting and prioritizing information. These activities will help us understand how our audience views the world and what solutions we can create to fit that view (i.e. “mental models”). This helps to answer our “What,” “Where,” “When” and “How” framing questions. Feedback at this point is not meant to refine any tight design concepts or code prototypes. Instead, it opens up new possibilities.

Evaluating and Informing Design

Methods in this area could include usability testing, heuristic evaluations, cognitive walkthroughs and paper prototyping. Once we’ve identified the functionality or content that’s appropriate for a user, how do we present it to them in a manner that’s useful and delightful? I use methods in this area to refine design comps, simulations and code prototypes. This helps us to answer questions about how users would want to use a product or to perform a key task. This feedback is critical and, as part of an iterative design process, enables us to refine and advance concepts to better meet user needs.

Let’s go back to our hypothetical example, so that you can see how your research objective and hypotheses determine which methods your team will select. Take all of your hypotheses — I like to start with at least 100 hypotheses — and arrange them on a continuum:

On the left, place hypotheses related to who your users are, where they live and work, their goals, their needs and so forth. On the right, place hypotheses that have to do with explicit functionality or design solutions you want to test with users. In the center, place hypotheses related to the types of content or functionality that you think might be relevant to users. The point of this activity is not to create an absolute scale or arrangement of the hypotheses that you’ve created so far. The point is for your team to cluster the hypotheses, finding important themes or affinities that will help you to select particular methods. Serota says:

“Choosing and refining your methods and approach is a design project within itself. It takes iteration, practice and time. Test things out on your friends and coworkers to see what works and the best way to ask open-ended questions.”
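If your team tracks its sticky notes in a spreadsheet or script, the continuum exercise can be roughed out as a simple grouping step. The Python sketch below rests on several assumptions: the position labels (`users`, `content`, `solutions`) are hypothetical names for the left, center and right of the continuum, the pairing of each region with a method category is one plausible reading of the categories above rather than a fixed rule, and the placement of the sample hypotheses is illustrative.

```python
from collections import defaultdict
from dataclasses import dataclass

# Hypothetical labels for the left, center and right of the continuum.
POSITIONS = ("users", "content", "solutions")

# Assumption: each region pairs loosely with one method category from this article.
METHOD_CATEGORY = {
    "users": "Building a Foundation",
    "content": "Generating Inspiration and Ideas",
    "solutions": "Evaluating and Informing Design",
}


@dataclass(frozen=True)
class Hypothesis:
    text: str
    position: str  # one of POSITIONS


def cluster(hypotheses):
    """Group hypothesis texts by continuum position, ordered left to right."""
    groups = defaultdict(list)
    for h in hypotheses:
        if h.position not in POSITIONS:
            raise ValueError(f"unknown position: {h.position!r}")
        groups[h.position].append(h.text)
    # Return only the regions that actually received hypotheses.
    return {p: groups[p] for p in POSITIONS if p in groups}


# Illustrative placement of shortened hypotheses from the clip-sharing example.
sample = [
    Hypothesis("Viewers share a highlight if it is popular with others", "solutions"),
    Hypothesis("Viewers like to hear about friends' favorite shows", "users"),
    Hypothesis("Viewers only share clips from shows they watch most", "content"),
]

for position, texts in cluster(sample).items():
    print(f"{position} -> {METHOD_CATEGORY[position]}: {len(texts)} hypothesis(es)")
```

The value is in seeing where the clusters are heaviest, not in the exact labels. A pile of hypotheses on the left suggests starting with foundational methods, while a pile on the right suggests evaluative ones.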

Back to our clip-sharing research effort. When your team looks at all of the hypotheses you’ve created to date, it will realize that using two research methods would be most valuable. The first method will be a participatory design activity, in which you’ll create with users a timeline of where and when they share their favorite TV moments with others. This will give your team foundational knowledge of situations in which clips might be shared, as well as generate opportunities for clip-sharing that you can discuss with users.

The second method will be an evaluative paper-prototyping activity, in which you will present higher-fidelity paper prototypes of ideas on how people can share TV clips. This method will help you address your hypotheses on what solutions make the most sense in sharing situations. (Using two methods is best because mixing and matching hypotheses across different categories within a research session could confuse research participants.)

4. Conduct: Gather Data Through The Methods We’ve Selected

The research plan is done, and you have laid out your early hypotheses on the table. Now you get to conduct the appropriate research methods. Your team will recruit eight users to meet with for one hour each over three evenings, which will allow you to speak with people when they’re most likely to be watching TV. Develop an interview guide and stimuli, and test draft versions of your activities on coworkers. Then, go into the field to conduct your research.

When you do this, it’s essential that you facilitate the research sessions properly, capturing and analyzing the notes, photos, videos and other materials that you collect as you go.

Serota also recommends thinking on your feet: “It’s all right to change course or switch something up in the field. You wouldn’t be learning if you didn’t have to shift at least a little bit.” Ask yourself, “Am I discovering what I need to learn in order to reach my objective? Or am I gathering information that I already know?” If you’re not gaining new knowledge, then one of the following is probably the reason why:

- You’ve already answered your research questions but haven’t taken the time to formulate new questions and hypotheses in order to dig deeper (otherwise, you could stop conducting research and move immediately into synthesis).

- The people who you believed were the target audience are, in fact, not. You’ll need to change the recruitment process (and the demographics or psychographics by which you selected them).

- Your early design hypotheses are a poor fit. So, consider improving them or generating more.

- The methods you’ve selected are not appropriate. So, adapt or change them.

- You are spending all of your time in research sessions with users, rather than balancing research sessions with analysis of what you’ve discovered.

5. Synthesis: Answer Our Research Questions, And Prove Or Disprove Our Hypotheses

Now that you’ve gathered research data, it’s time to capture the knowledge required to answer your research questions and to advance your design goals. “In synthesis, you’re trying to find meaning in your data,” says Serota. “This is often a messy process — and can mean reading between the lines and not taking a quote or something observed at face value. The why behind a piece of data is always more important than the what.”

The more time you have for synthesis, the more meaning you can extract from the research data. In the synthesis stage, regularly ask yourself and your team the following questions:

- “What am I learning?”

- “Does what I’ve learned change how we should frame the original research objective?”

- “Did we prove or disprove our hypotheses?”

- “Is there a pattern in the data that suggests new design considerations?”

- “What are the implications of what I’m designing?”

- “What outputs are most important for communicating what we’ve discovered?”

- “Do I need to change what design activities I plan to do next?”

- “What gaps in knowledge have I uncovered and might need to research at a later date?”

So, what did your team discover from your research into sharing TV clips? TV watchers do want to share clips from their favorite programs, but they are also just as likely to share clips from programs they don’t watch frequently if they find the clips humorous. They do want to share TV clips with friends in their social networks, but they don’t want to continually spam everyone in their Facebook or Twitter feed. They want to target family, close friends or specific individuals with clips that they believe those people would find particularly interesting.

Your team should assemble concise, actionable findings and revise its wireframes to reflect the necessary changes, based on the answers you’ve gathered. Now your team will have more confidence in the solution, and when your designs for the feature have been coded, you’ll take another spin through the research learning spiral to evaluate whether you got it right.

Further Reading On User-Research Practices And Methods

The spiral makes it clear that user research is not simply about card sorting, paper prototyping, usability studies and contextual interviews, per se. Those are just methods that researchers use to find answers to critical questions — answers that fuel their design efforts. Still, understanding what methods are available to you, and mastering those methods, can take some time. Below are some books and websites that will help you dive deeper into the user-research process and methods as part of your professional practice.

- Observing the User Experience, Second Edition: A Practitioner’s Guide to User Research, Elizabeth Goodman, Mike Kuniavsky and Andrea Moed. A comprehensive guide to user research. Goes deep into many of the methods mentioned in this article.

- Universal Methods of Design, Bruce Hanington and Bella Martin. A comprehensive overview of 100 methods that can be employed at various points in the user-research and design process.

- 101 Design Methods: A Structured Approach for Driving Innovation in Your Organization, Vijay Kumar. Places the user research process in the context of product and service innovation.

- Design Library, Austin Center for Design (AC4D). An in-depth series of PDFs and worksheets that cover processes related to user-research planning, methods and synthesis.
